Deep Theory: The Philosophy of Information and Function

Why philosophy?

Most professional scientists dismiss philosophy as at best 'not relevant to their work' and many are quite scathing, regarding it as a self-indulgent waste of time (often phrased in far less polite terms*). If we are merely applying established scientific method to, e.g. test simple hypotheses or perform standard procedures, then indeed there is no need to return to the philosophical underpinnings of science every time.

Here, though, we are confronting a mystery that standard methods of science have so far failed to get a proper grasp of. The mystery is what makes the fundamental difference between the living and the inanimate, between alive and dead: in other words, what exactly is life? Some would say this question is beyond the bounds of established empirical science. Some point to the lack of agreement among scientists, despite substantial efforts, on how to define life (Machery 2012). Others consider the question pointless and illusory because there is no natural boundary between living and not: a point well illustrated by the debate over whether viruses are alive (Van Regenmortel 2004; 2016). There are those, like the physicists and astrobiologists Sara Walker and Paul Davies, who seek a new principle of physics to explain life in terms of information control (Walker and Davies 2013; 2017). But the most fundamental criticism of conventional science comes from pioneering theoretical biologists such as Robert Rosen, Francisco Varela and Humberto Maturana, and the social psychologist Gregory Bateson, who all locate the problem in reductionism, the machine metaphor and, more fundamentally still, the mistaken ideas that "everything is number" (Rosen 2000) and that cognition is matching an external reality (Maturana and Varela, 1980; Bateson, 1979). Rosen's criticism challenges the paradigm, not just of post-Enlightenment science, but of mathematics and science since the 6th century BC! His argument seems cogent and, though technically difficult for the non-mathematician, it is not to be dismissed lightly. The more accessible criticism of the 'machine metaphor', in which life is seen as pure mechanism (see Marques and Brito (2014) for a review), is shared by all the critics I listed and very many more. The reason that reducing organisms to mechanism cannot explain how they live is that no mechanistic system or analysis is able to accommodate the self-recursive creation that is core to life.

Trying to discover what life is, in the deepest sense possible, turns out to require an understanding of how the parts work together to make and maintain one another. We are by now very good at reductionist explanation, which has been the mainstream of science for at least a century. The methods and thinking needed to go the other way, to reconstruct systems (especially complex ones), are rather less well developed and less visited by most scientists. Reductionism grew from a philosophy of science that emerged from the Enlightenment movement of empiricism. If we need a new paradigm to tackle deeper questions, we need to return to the place from where paradigms emerge: the philosophical roots of science. Whether or not life is beyond current physical explanation is a challenge to reductionism. That is why it is useful to return to the underpinning philosophy of science, as so many of those just mentioned did.

* For example the old joke: for maths all you need is a pencil, paper and a waste paper basket; for philosophy you just need the pencil and paper.

Language and meaning

The foundation of all systematic thinking (of which science is a part) is a set of concepts and words that are very precisely defined. In the course of developing a theory of life, scientists have repeatedly had to use concepts such as causation, function and autonomy. These sound simple to define, but just as with information, they are single words having to cover many technical meanings, and that is a source of confusion. It is not at all easy to construct definitions for concepts that are strong enough to be the foundation of a rigorous scientific explanation. Philosophy is the preeminent intellectual tool for making ‘watertight’ definitions of concepts: it has a long-established role in strictly defining language and concepts. The process of defining terms often reveals weaknesses and ambiguities in the concepts being expressed by them. This is where the rigor and wealth of intellectual experience offered by philosophy really counts.

Take for example the term ‘information’. As with all words of this sort, we first think we know what it means, but more focussed thought reveals a fuzzy picture of overlapping - and sometimes conflicting - concepts, broadly to do with information. Understanding what life is and how it works will require both a statistical notion of information as a reduction in uncertainty and an ontological concept of information as the form or ordering of material systems to make them what they are. The former we may often refer to as Shannon information and the latter concept may be named after its originator (as far as we know) - Aristotle. Luciano Floridi is a modern-day pioneer in developing the precise language and concepts needed to work with information, from whom we have gained much inspiration. We have provided a glossary of the interconnected information language that we use on this website.

One of the defining features of living beings is that they ‘do things’, as Stuart Kauffman memorably put it. One way in which life is unique is the apparent ability of living organisms to initiate actions of their own choosing. This involves both causation (doing is causing) and autonomy (acting without the need for external intervention).

Cause itself is a major issue in philosophy, first formalised (as far as we know) by Aristotle (384-322 BC) and developed into a scientifically useful (e.g. quantifiable) modern concept through a long progression via Enlightenment philosophers such as David Hume (1711-1776), early 20th century philosophers influenced by physics, such as Bertrand Russell (1872-1970), and contemporary workers such as the philosopher James Woodward and the statisticians Judea Pearl and Karl Friston. The role of cause in understanding life particularly arises from the apparent contradiction of circular causality - a thing that is the cause of itself. The conventional view is that circular causation is a logical impossibility, but the conventional view on causation is probably too narrow for working with the fundamentals of life as a phenomenon. On our pages about causation, we demonstrate that there are quite a few different concepts in the one word and that at least one actually requires circularity to operate.

If cause lies behind what happens, then for a complicated system, we might reasonably ask which parts are causal of the system’s behaviour and in what way. Those found to have an effect can be called functional for the system (according to Robert Rosen’s definition, function is what is lost when a functional component of a system is removed). Ideas about function have developed mainly from the ‘machine metaphor’ and are, of course, strongly connected to ‘purpose’ and with that notion, they steer dangerously close to teleology (questions of why things do what they do in the sense of what they are ‘for’). Such questions are unremarkable when contemplating a human artifact, but are strictly taboo for scientific inquiry into the natural world. Again there is a philosophical literature on the nature and meaning of function and, building especially on the causal-role interpretation of Cummins (1975), we have developed a rigorous definition that helps understand living systems.
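Rosen's removal criterion can be illustrated with a minimal sketch in Python. Everything in it is an assumption made for illustration: the toy 'pathway', its component names and the `system_output` rule are hypothetical and are not taken from Rosen or from any real biochemical system. The sketch only shows the logic of asking what the whole loses when one component is taken away.

```python
def system_output(present):
    """Toy whole-system capacity (purely illustrative, not a real pathway).

    The pathway yields product only if both enzymes are present; a chaperone,
    if present, doubles the yield; a bystander molecule changes nothing.
    """
    base_yield = 5.0 if {"enzyme_a", "enzyme_b"} <= present else 0.0
    boost = 2.0 if "chaperone" in present else 1.0
    return base_yield * boost


def functional_loss(component, components):
    """What the whole system loses when `component` is removed."""
    whole = system_output(components)
    ablated = system_output(components - {component})
    return whole - ablated


components = frozenset({"enzyme_a", "enzyme_b", "chaperone", "bystander"})
losses = {c: functional_loss(c, components) for c in sorted(components)}

for name, loss in losses.items():
    label = "functional" if loss > 0 else "not functional"
    print(f"{name}: loss = {loss} ({label})")
```

In this toy case the two enzymes and the chaperone count as functional because removing any of them reduces the output, while the bystander does not. Note that the verdict depends entirely on which whole-system capacity is chosen, which is one reason a careful definition of function is needed.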

The function of components of a system which is causally closed by causal circularity is a contribution to the making and maintenance of the system: this idea being one of the main pillars of the theory of autopoiesis. An autopoietic system is causally independent (though dependent on its environment for raw materials and energy), but also able to sense changes in its environment and respond internally to them. The act of internally modelling the environment as a response to what is sensed is the other main pillar: what Varela and Maturana termed ‘cognition’. In all non-living systems what happens is an inevitable consequence of the chain of cause and effect: internal changes have no option but to follow environmental changes in a pre-determined way. Living systems alone are able to break the chain and transform cause-effect relations into perception-response relations because their internal states and even their internal structure are of their own making. From this fact alone, concepts such as function and autonomy become meaningful.

That leads us to autonomy - not surprisingly another venerable topic in philosophy and one closely connected with questions of determinism and free will. At first sight, the very idea of autonomy seems to contradict determinism. The answer, we think, is that cause is the result of information constraining the action of physical forces and if a system is causally closed (by circularity), then it can have within it ‘private’ embodied information that provides a source of constraint and therefore of cause from within the system. This may well be what lies behind the autonomy of organisms.

Finally, and I suppose ultimately, we are forced to think about how things exist at all and how life and living things came to be. That is because we cannot really understand life until we understand how it came into existence without the help of other life (as all existing examples of life are created by the already living). Answering such existential questions is the domain of an important branch of philosophy called ontology. Broadly, it tries to understand the nature of reality: what causes things to be at all and why they are a particular way and not otherwise. Causal loops can refer to the way states of a system are determined (e.g. in metabolic pathways, cell signalling networks or brains), but to understand life, we need to contemplate causal loops of being, where one subsystem brings another into being, which in turn brings the first into being. This fundamental property, summarised as autopoiesis, is ontological in nature and poses a substantial challenge to the existing philosophy of science, as Robert Rosen so clearly saw.

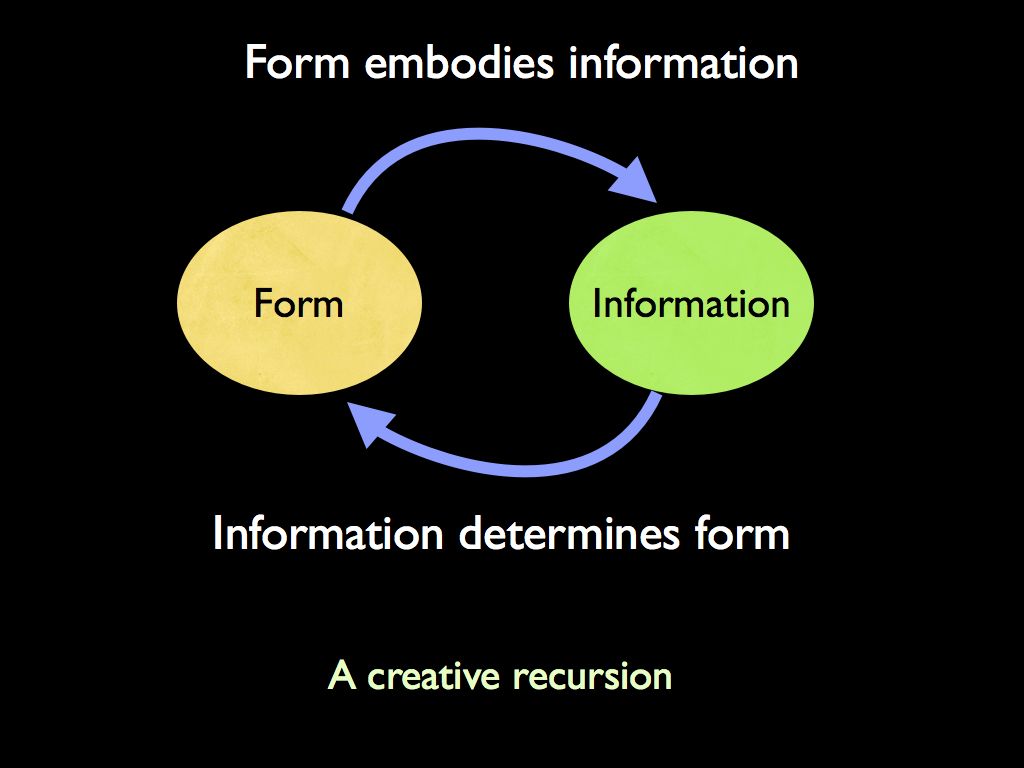

Aristotle's information

A growing movement in this subject takes the view that information itself is the essence of existence. In this case, the word ‘information’ does not refer to information about things, but to the physical information embodied in the form of things. The thinking behind this website subscribes to the philosophical proposition that matter and energy are arranged in space and time by information, to create the variety of identifiable physical objects and phenomena of the universe. In other words, matter and energy (the stuff we normally think of as the essence of existence) are merely the inert clay - the raw material - for composing the universe; information is what makes it what it is. Many attribute the origin of this ontological view to Aristotle (so philosophers sometimes refer to an Aristotelean meaning of ‘information’). In this view, it is a specific arrangement of fundamental particles within space and time that makes any single thing what it is and also determines its properties and behaviour. The particular arrangement is a pattern and the pattern is information - specifying the particular taken from an assembly of all possible patterns.

Given this information-centric picture of reality, life can itself be seen as an informational system that maintains, replicates and refines itself (all in the medium of biochemistry). The answer to how it does all that is to be found in the laws and behaviours of information, not fundamentally in the mechanisms of biochemistry, though they are of course important for its realisation. Indeed the material restrictions of biochemistry are precisely what make the information-level construction of life possible, since these restrictions provide the 'grammatical constraints' needed for a meaningful language: the rules by which structural components and their interactions are defined. Out of constraints comes creativity, just as it is with literature, music and art (which obviously would not exist without some rules).

This idea of information is the basis for separating organisation from material and efficient cause from material cause: the former being the necessity of one thing, given another, the latter being the constraint on this imposed by the substances involved. It shows us organisation as multiply realisable, separate from its material embodiment. This enables us to concentrate on the 'physics of organisation' through relational modelling, cybernetics and information theory, rather than being bogged down in the biochemical mechanisms.

This notion of 'information' does not replace the 'reduction in uncertainty' quantified in Shannon's information theory. It is important to realise that the two are separate (but related) meanings. Shannon's idea of information is useful for quantifying what may be 'read' from the form of an object or system. Shannon's information is strictly relational though, since he developed it to describe transmission between a source and a receiver: this is a fundamental difference from Aristotelean information, which is intrinsic to the system.
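To make 'reduction in uncertainty' concrete, here is a minimal sketch in Python using the standard Shannon entropy formula, H = -Σ p log2(p). The scenario is a hypothetical one chosen only for illustration: a receiver that initially regards eight patterns as equally likely, and a message that narrows them to two.

```python
import math


def entropy_bits(probabilities):
    """Shannon entropy H = -sum(p * log2(p)) of a discrete distribution, in bits."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


# Hypothetical example: before the message, the receiver considers 8 patterns
# equally likely; after it, only 2 equally likely patterns remain.
prior = [1 / 8] * 8
posterior = [1 / 2] * 2

# Information received = uncertainty before minus uncertainty after.
information_gained = entropy_bits(prior) - entropy_bits(posterior)
print(information_gained)  # 3 bits of prior uncertainty minus 1 bit remaining
```

The quantity is defined relative to the receiver's prior expectations, which is the relational character noted above: the same message would carry a different number of bits for a receiver with a different prior.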

Causation, Autonomy and Function

One of the unique features of living systems (from cells and bodies to ecological communities) is that they seem to do things of their own accord, without being necessarily caused to do them by their surroundings. As physicist Paul Davies put it: "throw a stone in the air and we can easily predict its trajectory, throw a bird in the air and its direction of movement is anyone's guess". This autonomy of action (which is a feature of even the simplest microbes) has long fascinated scientists and been an inspiration for the 'Schrödinger question': "What is Life?". In his book "Life Itself", Robert Rosen (a leading thinker in this field) noted how René Descartes, who was impressed with the mechanical automata of his day, which behaved as if they were autonomous, established the machine paradigm. Descartes believed not that machines were in some respects life-like, but that life itself was an example of a machine - and this misconception has pervaded biology ever since. A large part of Rosen's seminal book is devoted to exposing and eradicating this mistake: life, in a deep and scientifically crucial way, is not a member of the class 'machine'. He realised that the difference between machines and life was a matter of organisation, in particular, the irremediable inability of machines (as formally defined by Turing) to encompass self-entailment.

Taking up this theme, Stuart Kauffman points out that for the non-living world physics obviously gives us a sufficient explanation, since it describes what happens to objects, but organisms are not merely reacting to forces, they actually do things, i.e. they are sources of happening. The phenomena of agency and autonomy are peculiar to life and are explained in terms of self-entailment, in the abstract by Rosen's theory and in a more biologically specific way by Maturana and Varela's theory of autopoiesis: the making and maintaining of oneself.

The consequence of this for being "the author of one's own actions" is taken up in arguments about 'free will', for which the provision of a scientific explanation is part of the IFB project (see Autonomy and Downward Causation).

With the ability to initiate actions, the question of why this and not that action obviously arises. Answering this question entails an understanding of biological function. Our use of Stuart Kauffman's concept of a Kantian Whole, defined by causal closure, and the idea of life organised as a nested hierarchy of systems of components belonging to functional equivalence classes (promoted in biology by Denis Noble) led to a more precise rendering of the Cummins (1975) definition: ‘function’ is an objective account of the contribution made by a system’s component to the ‘capacity’ of the whole system. Our definition of biological function is this:

A biological function is a process enacted by a biological system A at emergent level n which influences one or more processes of a system B at level n+1, of which A is a component part.

(Farnsworth et al. 2017).

The Autonomy of Organisms

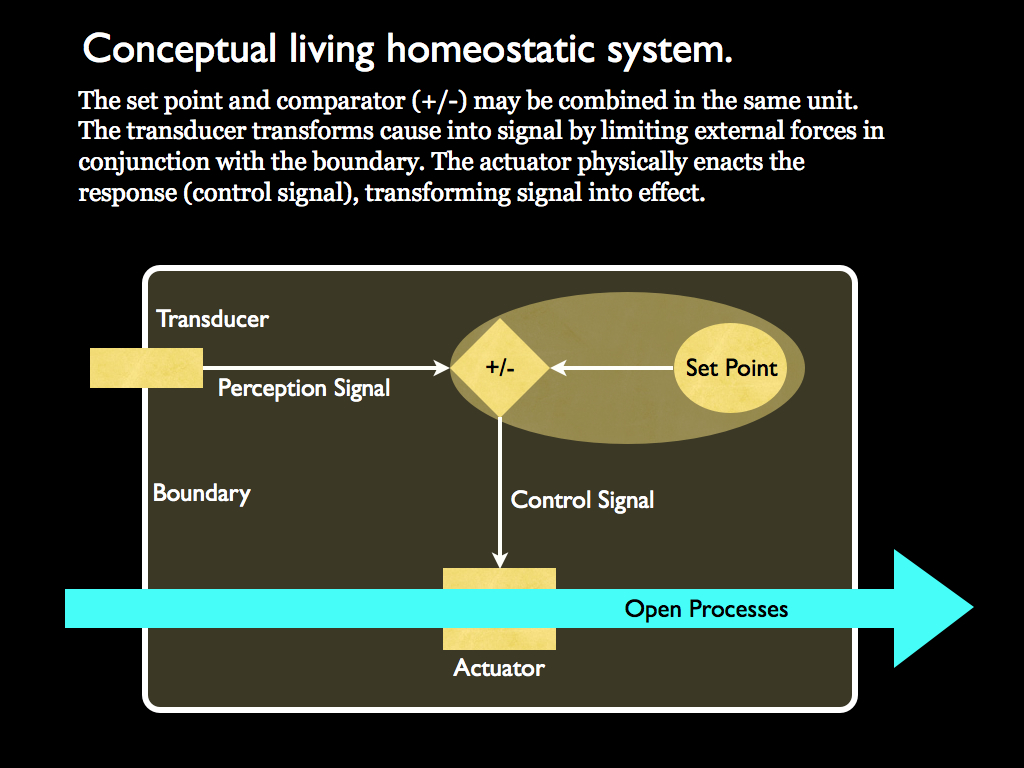

Whether or not we (humans) have free-will, or even what that means, is one of the oldest and most studied of philosophical questions. It is surprising that so little effort has yet been devoted to addressing these questions in a) broader terms of organisms or systems in general and b) scientific and especially cybernetic terms. Here, we find that the cybernetic (informational) approach to questions of autonomy, agency and free-will (closely aligned with an inquiry into causation and closure) reveals something very special (even unique and definitive) about living systems. This is taken up on the autonomy page and those to which it links. The conclusion is that organisms are able to express autonomy (as freedom from exogenous control) by virtue of being organisationally closed and that, through evolution, systems of ever increasing power and sophistication have developed to allow this to expand: first to selecting appropriate actions from a behavioral repertoire, then to being able to evaluate potential actions in terms of the best interests of the self, and eventually to higher levels of free-will. The basic and foundational element of this action-selection autonomy is the homeostatic system, which includes the embodied information of a 'set-point', and this 'private' information is the source of agency in all organisms (see diagram). The more scientific approach followed here shows that free-will is not an all-or-nothing attribute, but rather a property of organisationally closed systems that exists in degrees (i.e. on an ordinal scale). Presumably humans show its highest degree (as far as we know), but even humans do not have unlimited free will. This is not a mere re-definition of, or deviation from, the standard philosophical concept of free will: it is entirely compatible with that concept, but it produces conclusions that many conventional philosophers currently find unpalatable. These ideas are presented in Farnsworth (2017; 2018).
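The role of a 'private' set-point can be illustrated with a minimal negative-feedback sketch in Python. This is a toy under stated assumptions, not the model of Farnsworth (2017; 2018): the proportional correction rule, the `gain` value, the function names and the numbers are all hypothetical choices made for illustration.

```python
def homeostat_step(state, set_point, disturbance, gain=0.5):
    """One step of a simple negative-feedback loop (illustrative only).

    The environment shifts the state by `disturbance`; the system then
    corrects a fraction `gain` of its deviation from its internal set-point.
    """
    perturbed = state + disturbance
    error = set_point - perturbed
    return perturbed + gain * error


def run(set_point, disturbances, start, gain=0.5):
    """Apply a sequence of environmental disturbances and record the states."""
    state = start
    history = []
    for disturbance in disturbances:
        state = homeostat_step(state, set_point, disturbance, gain)
        history.append(state)
    return history


# The same environmental history applied to two systems that differ only in
# their internally held set-point (hypothetical values).
disturbances = [1.0, -2.0, 0.5, 0.0, 0.0, 0.0, 0.0, 0.0]
warm = run(set_point=37.0, disturbances=disturbances, start=37.0)
cool = run(set_point=20.0, disturbances=disturbances, start=20.0)

print(round(warm[-1], 3), round(cool[-1], 3))
```

Both systems receive identical external inputs, yet each returns towards its own set-point, so where they end up is fixed by information held inside the system and not by the environment. A thermostat does this too, of course; the argument above is that in organisms the set-point is embodied in a structure of the organism's own making.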

It would be very interesting to find that the quintessential feature of life is the autonomy that arises from its being autopoietic and algorithmic (cybernetic), so that it has the organisational closure properties at multiple scales of a nested hierarchy, each of which creates function, and the whole thing is a sort of computer, computing itself - and all of this is information processing.

References

Bateson, G. (1979). Mind and Nature. Dutton, New York.

Farnsworth, K.D. (2017). Can a Robot Have Free Will? Entropy. 19, 237; doi:10.3390/e19050237

Farnsworth, K.D. (2018). How organisms gained causal independence and how to quantify it. Biology.

Farnsworth, K.D.; Albantakis, L.; Caruso, T. (2017). Unifying concepts of biological function from molecules to ecosystems. Oikos, doi: 10.1111/oik.04171.

Machery, E. (2012). Why I stopped worrying about the definition of life... and why you should as well. Synthese, 185(1), 145-164.

Marques, V. and Brito, C. (2014). The Rise and Fall of the Machine Metaphor: Organisational similarities and differences between machines and living beings. Verifiche, XLIII, 1-3.

Maturana, H. and Varela, F. J. (1980). Autopoiesis and Cognition: the Realization of the Living. D. Reidel Publishing Company, Dordrecht, NL. English translation of original: De Maquinas y seres vivos. Universitaria Santiago.

Van Regenmortel, M. H. V. (2004). Reductionism and complexity in molecular biology. EMBO Reports, 5, 1016–1020.

Van Regenmortel, M. H. V. (2016). The metaphor that viruses are living is alive and well, but it is no more than a metaphor. Studies in History and Philosophy of Biological and Biomedical Sciences, 59:117–124.

Walker, S.; Davies, P. (2013). The algorithmic origins of life. J. R. Soc. Interface, 10.

Walker, S.; Davies, P. (2017). Chapter 2 The Hard Problem of Life. In From Matter to Life; Cambridge University Press: Cambridge, UK. pp. 17–31.

Summary

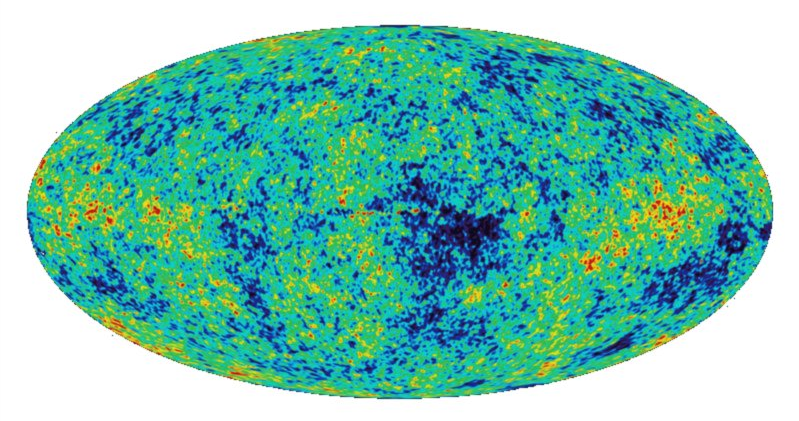

The illustration for this theme is a map of the 'cosmic background radiation', showing the earliest large-scale pattern created by information in the universe that has been observed to date. Life can also be interpreted as a concentration of pattern, instantiating and processing information. In this theme, we explore the fundamental concepts used to elaborate and build theories with these ideas. This includes an examination of the difference (and connections) between the statistical theory of information and a more general understanding of information as 'meaningful' pattern*.

The result is that an essential characteristic of information, in the creation and maintenance of life, is functionality. Function and information are integral to the concept of causation and causation is the 'raw material' for autonomy. The big bang created a cascade of cause-effect chains that led to the creation of life (at least on this planet), but from then on, apparently new chains began to appear - these ones generated from within the newly created organisms. What a marvel! Many people will look at the night sky, seeing billions of stars on a clear night and think "how small and insignificant am I". But now look at it in the light, not of specks of stellar radiation, but of concentrations of information and its processing. Now realise that we, complex living organisms, are by far the brightest stars in the known universe.

*(‘Meaning’ is a vague and confusing term, which we attempt to pin down and generalise, rendering it suitable for objective analysis here).

This Theme seeks to:

- Define Information: take a look here for a start at defining 'information' language.

- Define causation and explain how causal relationships can be used to model living systems.

- Define Function: we have a go on this page (but it should be considered alongside the definition of information).

- Explain how these enable us to understand how functional information constitutes the multiple levels of life: try this page.

- Explain how organisms differ from all other systems (via their autonomy) by using cybernetic theory.