The Autonomy of Life

A short article by Keith Farnsworth. Major update in January 2022 (relatively minor update in September 2023). It should be read in conjunction with the page on concepts of closure if you have not already seen that one.

What is Autonomy?

At the simplest level, autonomy literally means 'living by one's own laws', from the Greek: auto (self) and nomos (law). But as usual, this seemingly simple word proves hard to define because it has to convey a range of concepts for which we really need several different words.

Cognitive scientist Margaret Boden put it this way: "Very broadly speaking, autonomy is self-determination: the ability to do what one does independently, without being forced so to do by some outside power" (Boden, 2008) (note that the 'one' here is by no means necessarily a person). She identifies three aspects of system control (I might substitute 'causation') as necessary for autonomy: a) the extent to which responses to the environment are "mediated by inner mechanisms", b) the extent to which the (inner) "controlling mechanisms are self-generated rather than externally imposed" and c) the extent to which these inner mechanisms can be "reflected upon" and modified to better suit the objectives of the system in the light of the current situation.

Her use of the term "reflect upon" is unfortunate as it implies conscious cognition. I hope to persuade you that autonomy is a property that does not depend on consciousness and is certainly not limited to human beings.

Still, it does seem that:

a) freedom from direct control by the environment,

b) self-making of the mechanisms of internal control and

c) adaptation of those mechanisms to suit a changing environment

are the three main characteristics of autonomy. We already know that these attributes belong to a living cell; let us then consider them in application to any system in general and see how far that can extend back from the human being.

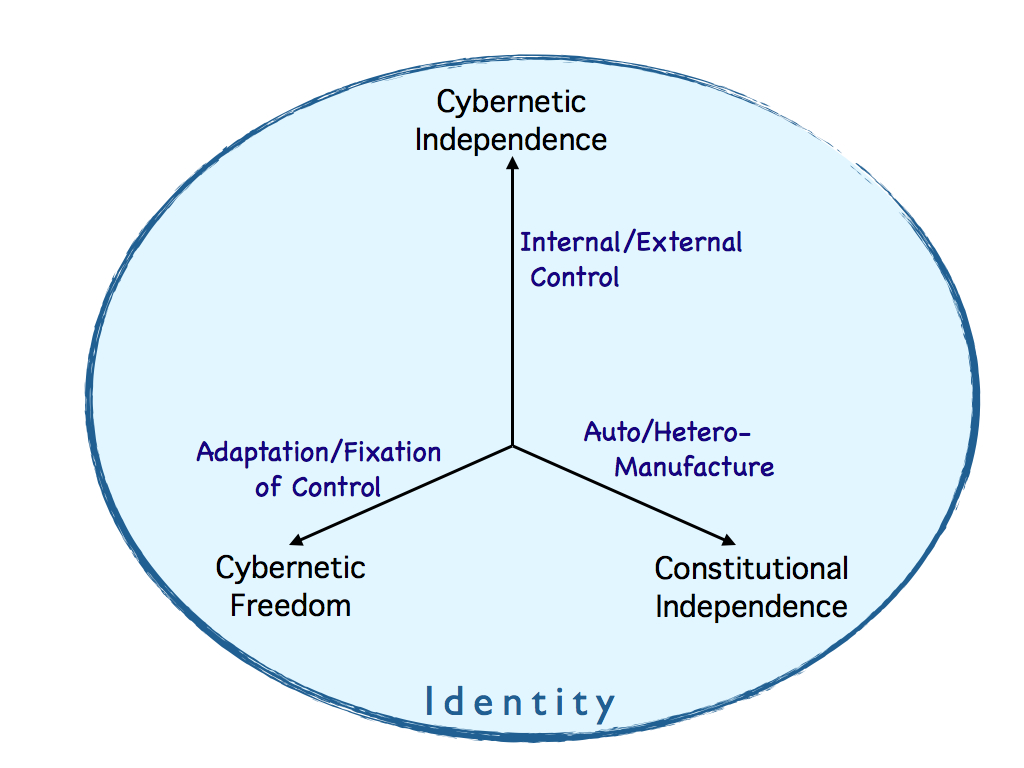

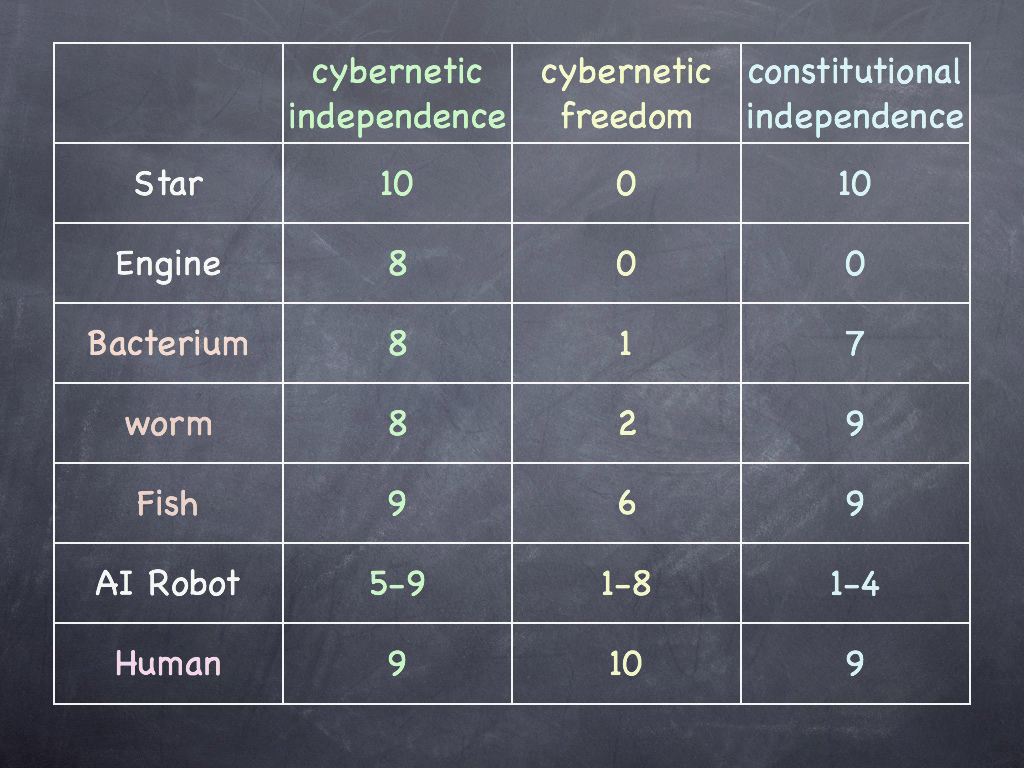

We can see that autonomy is not all or nothing: the three attributes listed above each describe an aspect of the ratio of control that is self-generated over that externally determined. Each attribute of autonomy can be quantified by a continuous variable. We can identify the three ratio-measure axes of autonomy as: internal/external control (cybernetic independence), adaptation/fixation of control (cybernetic freedom) and auto/hetero-manufacture (constitutional independence), as illustrated in the accompanying figure.

(Figure: my copyright.)

From this we immediately have the idea of degrees of autonomy: a system may have a high level of cybernetic independence, but little or no constitutional independence; it may enjoy some independence in all three aspects, but each only partially. In other words, we are in a position to quantify autonomy in three conceptually different, necessary and probably sufficient dimensions of variation.
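To make the idea of degrees of autonomy concrete, the sketch below represents a system as a point in a three-dimensional space, one coordinate per axis. It is only an illustration under stated assumptions: each 'ratio' of internal to external causation is normalised here as internal / (internal + external) so that it runs from 0 to 1, and the numbers given for the hypothetical automaton are invented, not measurements.

```python
from dataclasses import dataclass


def internal_share(internal: float, external: float) -> float:
    """Return the fraction of causation that is internally generated, in [0, 1].

    Assumption: the ratio of self-generated to externally determined control
    is normalised as internal / (internal + external), so it stays bounded.
    """
    total = internal + external
    if internal < 0 or external < 0 or total == 0:
        raise ValueError("contributions must be non-negative and not both zero")
    return internal / total


@dataclass(frozen=True)
class AutonomyProfile:
    """A point in the three-dimensional space of autonomy (each axis in [0, 1])."""
    cybernetic_independence: float      # internal vs external control
    cybernetic_freedom: float           # adaptable vs fixed control
    constitutional_independence: float  # self- vs hetero-manufacture

    def as_vector(self) -> tuple[float, float, float]:
        return (self.cybernetic_independence,
                self.cybernetic_freedom,
                self.constitutional_independence)

    def is_all_or_nothing(self) -> bool:
        # True only if every axis sits at an extreme (0 or 1).
        return all(v in (0.0, 1.0) for v in self.as_vector())


# Hypothetical automaton: mostly self-controlled, little behavioural leeway,
# and built entirely by someone else (illustrative numbers only).
automaton = AutonomyProfile(
    cybernetic_independence=internal_share(8, 2),
    cybernetic_freedom=internal_share(1, 9),
    constitutional_independence=internal_share(0, 1),
)
print(automaton.as_vector())
print(automaton.is_all_or_nothing())
```

Because each coordinate can take any value between 0 and 1, the profile expresses autonomy as a matter of degree along each axis rather than as presence or absence.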

To organise our thoughts, let us now consider the relationship between seemingly similar words to establish a precise language for 'autonomy talk'.

Autonomy and agency

What exactly do we mean by 'control' when we talk of self-control and independent control? For a physical system, control means efficient cause: how things are made to happen by organised physical forces (see the physical basis of causation). It also carries the notion of constraint: control is the constraint of a system's range of possibilities, i.e. the limitation of the degrees of freedom it has to move among possible states. Self-control is then the organisation of forces exerted by parts of a system to limit the range of freedom of that system. With too many degrees of freedom a thing becomes loose and chaotic and falls apart, like a raw egg without its shell. With too few it becomes rigid and incapable of any kind of movement that can do work, i.e. it cannot perform physical actions (think of a seized engine).

Agency is the ability of a system to act in the physical world.

If it can exert organised forces on other systems (or its environment) to effect or control them, then it has agency. But we should be careful to realise that this implies that the forces emanate from within the system itself, and so does the organisation of them that makes these forces coherent and effective. Recalling that efficient cause is the combination of physical force with formal (information) constraint, we see that agency requires both forces and organisation to originate from within the system. Since physical forces all emanate from sub-atomic particles, it is not hard to see that this requires those particles to be part of the system. Since the organisation of the forces into efficient cause results from the information embodied by the system as its form (the arrangement of the particles in space and time), it also requires the particles to belong only to the system itself. These two conditions imply that the system is identifiable as an entity, separate from its surroundings. In other words, agency requires identity.

Autonomy and Identity

The philosopher John Collier (2002) began his account of autonomy with this simple definition: "autonomous systems both produce their own governance and use that governance to maintain themselves". He then explains that "autonomy is closely related to individuality", which in combination with self-governance provides "independent functionality".

I would go further than "closely related": self-governance makes no sense unless there is an identifiable self to self-govern, so it seems reasonable to argue that identity (selfhood) is a necessary pre-condition of autonomy. Collier is more subtle on this point, coining the term cohesion, based on his idea of unity "which is the closure of relations between parts of things that make it a whole". This means that, for a system even to qualify for autonomy, it has to be composed of parts that each interact with at least one other member part (that is the technical meaning of closure).

When these interactions are physical (force mediated), Collier terms the unity a case of cohesion. The trouble with this idea is that all physical parts (most fundamentally elementary particles), that are not strictly isolated, do interact with one another: the whole universe is in cohesion the way he has defined it. Collier suggests that a boundary is formed around a 'cohesion' by a discontinuity in the strength of interactions - a unity is formed from parts that interact more strongly with one another, giving (not very convincing) examples of atomic forces among atoms in a crystal and a gas in a box (for which the box is the boundary). Since this leaves us to rather arbitrarily identify the boundary, I don't think it is a great step forward. More usefully, Collier goes on to say that "autonomy is a special type of cohesion": a kind that is actively maintained by the interactions among its component parts. The physical activity requires physical work to be done (organised forces again). That is much more like Immanuel Kant's idea of a whole: the parts all contributing to the continued existence of the whole, which in return, does the same for each of its parts. Indeed, identifying the organisational interdependency of the parts is a strong foundation for understanding both identity and autonomy (and the connection between them). That is: a system can only be autonomous if, at least, the parts of the system are organised to form a Kantian whole.

Immanuel Kant (1724-1804), portrait by Johann Gottlieb Becker, 1768 (likely to be one of the most representative; public domain).

Kant argued that an organism is a system of whole and parts, the whole being the product of the parts, but the parts being dependent on the whole for their existence and proper functioning. This extraordinary leap predates the organisational view of biological systems by about 150 years and was rather incidental to Kant's main argument, which really had to do with the way people (then) thought about nature in terms of design [and many people still do] (see Guyer 1998 for details).

Specifically, the idea that living organisms require an explanation of agency because they are recognised as wholes (in which component parts all have a role) was first formalised by Immanuel Kant (1724-1804) in his “Critique of Teleological Judgment” (well described in Ginsborg, 2006). As Kant put it: for something to be judged as a natural end "it is required that its parts altogether reciprocally produce one another, as far as both their form and combination is concerned, and thus produce a whole out of their own causality". A system composed of parts which in turn owe their existence to that of the system was accordingly termed a ‘Kantian whole’ by Stuart Kauffman (Kauffman and Clayton 2006), and it seems a pity that his terminology has not caught on yet.

There can be little doubt that being a 'Kantian whole' would qualify a system for autonomy. But I prefer to define identity with less restriction, as the property a system has by virtue of being organisationally closed. This definition, which technically means that its parts are identifiable as members of a system of transitively related interactions [see closure for details], includes any system for which every part in some way constrains the behaviour of every other part.
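One way to make this definition concrete (an illustrative formalisation assumed here, not a method from the sources cited) is to treat the parts as nodes of a directed graph in which an edge means 'constrains the behaviour of'. Every part then constrains every other part, directly or through a chain, exactly when every node can reach every other node. For contrast, the sketch also checks Collier's weaker condition that each part merely interacts with at least one other. The part names are hypothetical.

```python
from collections import deque


def parts_of(graph: dict[str, set[str]]) -> set[str]:
    """All parts mentioned in the graph, as sources or as targets."""
    return set(graph) | {p for targets in graph.values() for p in targets}


def reachable(graph: dict[str, set[str]], start: str) -> set[str]:
    """Parts that `start` constrains directly or transitively (including itself)."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, set()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def is_organisationally_closed(graph: dict[str, set[str]]) -> bool:
    """True if every part constrains every other part through some chain."""
    parts = parts_of(graph)
    return all(reachable(graph, p) == parts for p in parts)


def every_part_interacts(graph: dict[str, set[str]]) -> bool:
    """Weaker condition: each part is linked (either direction) to at least one other."""
    parts = parts_of(graph)
    linked = {p: set() for p in parts}
    for src, targets in graph.items():
        for dst in targets:
            if dst != src:
                linked[src].add(dst)
                linked[dst].add(src)
    return all(linked[p] for p in parts)


cycle = {"A": {"B"}, "B": {"C"}, "C": {"A"}}
# D is constrained by A but constrains nothing in return.
with_tail = {"A": {"B", "D"}, "B": {"C"}, "C": {"A"}}

print(is_organisationally_closed(cycle))      # True
print(is_organisationally_closed(with_tail))  # False
print(every_part_interacts(with_tail))        # True
```

The second example shows why the transitive requirement matters: every part interacts with something, yet D influences none of the others, so the system is not organisationally closed.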

I prefer that because requiring the 'Kantian whole' property sets a very high threshold for autonomy. Not only does the system have to govern itself, it also has to be responsible for making itself in the first place: that is, it needs constitutive autonomy as well as cybernetic autonomy. To an extent, living organisms do exactly that, but even for them, self-making is not strictly true, since every organism alive today was created by at least one parent organism. That brings us to constitutive autonomy.

Constitutive Autonomy

We have to include this self-manufacture (constitutional independence) in our definition because if a thing is not the creator of itself, then it did not determine the constitutional causes of its behaviour (its form), so it owes a degree of its behaviour to an exogenous control, thereby losing autonomy. We all owe at least that much to our parents.

Once made, the first duty of any autonomous system is to maintain its functional integrity, for without that it can do nothing else. Intuitively, the more it is able to maintain itself the more autonomous it must be. Unless a system was entirely and spontaneously self-creating, during the early stage of its existence, when it was incomplete, it would need the help (intervention) of some other system to maintain it. Again, the obvious example is the dependence of a new organism on its parent(s), including the inheritance of their genes, cytoplasm and cellular membrane (e.g. among bacteria) and the support required in this early stage. A different example is the dependence of a robot on its makers and those who maintain it. Once fully formed, organisms meet the requirements for constitutive autonomy and there is no reason in principle why a robot could not also, though our technology does not yet extend nearly that far.

The everyday meaning of autonomy lacks this constitutive requirement, and the common usage of the word in artificial intelligence (AI) usually excludes the creation phase (we don't yet require autonomous artefacts to be self-making). But theoretical biologists examining the fundamental nature of life have taken constitutive autonomy all the way and insist that for true autonomy a system must be the creator and maintainer of its own organisational structure. Indeed, it is one of the core ideas behind my own conclusion in the paper "Can a Robot have Free Will?" (Farnsworth 2017), more on which shortly. We should take care to notice, however, that the only things for which constitutive autonomy might be strictly true are a) the universe as a whole and b) life as a whole, though there is still room for doubt even for these. (The reason for these exceptions is that our best scientific explanation for the universe is spontaneous emergence and, somewhat tautologically, if life is defined as autopoiesis, it too must have been spontaneously emergent.)

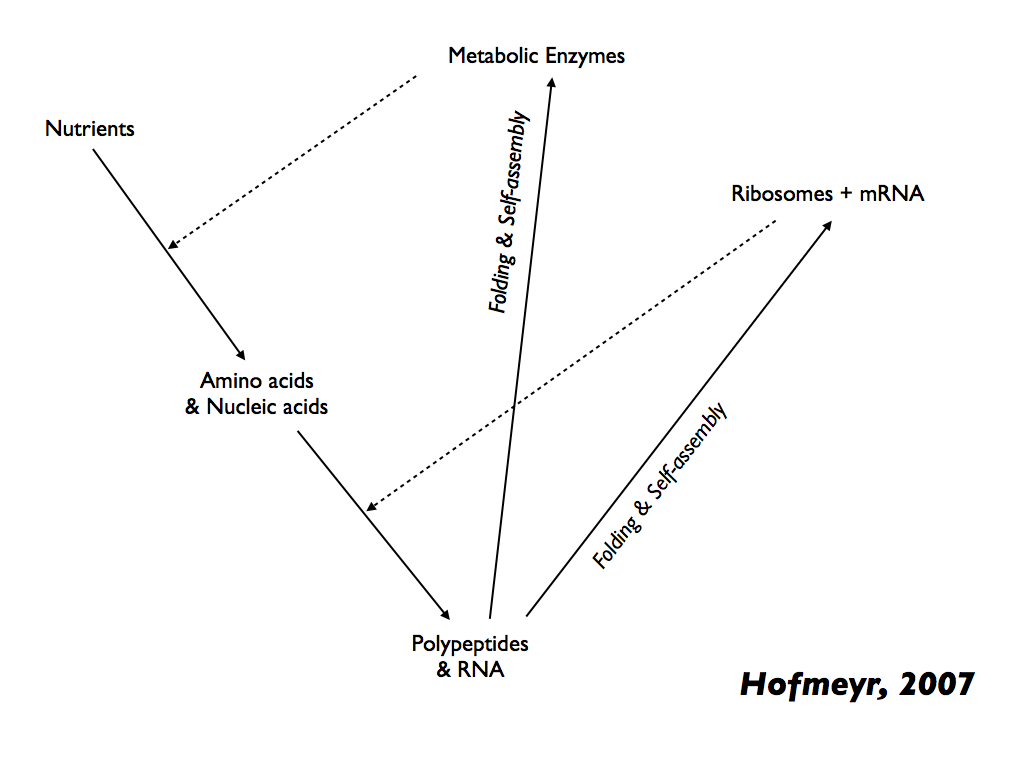

So far, the best explanation for any kind of self-making and self-maintaining system is Hofmeyr's (2021) model of the self-manufacturing cell (featured in our blog post). The simplest meaningful example is his earlier (2007) representation of a cell (shown in the figure), in which material causes (solid arrows) combine with efficient causes (dotted arrows) to form a system with closure to efficient causation, in which nutrients are transformed into the biomolecules needed for the transformations to take place [you can see all this explained on the autopoiesis page].

Among non-living things, the closest I have found is the fascinating attempt at autonomously self-assembling robotics by Griffith, Goldwater and Jacobson (2005), though it is still a long way from self-manufacture. Clearly, constitutive autonomy is a difficult criterion to satisfy and has only been managed, for certain, by life. That is a fact which I find highly significant.

Autonomy and independence are often used interchangeably, but they are not the same. Independence has two meanings. The more common one, 'freedom from external control', may be aligned with what I am calling cybernetic independence: an independent system is able to determine its own actions. The other, slightly more technical meaning of independence is 'unaffected by other variables', as in: 'the speed of the engine is independent of its temperature'. If a system is entirely independent in this sense, then nothing in its environment can affect what it does. That would be a negation of what I call cybernetic freedom, because it denies the system any ability to respond or adapt: its behaviour in no way relates to its environment, and it could only be called autonomous in the trivial sense that it is isolated from the world around it. Systems with no ability to sense their environment, let alone to respond to it, are not really autonomous because they are fixed in their behaviour. The only possible source for determining their behaviour is their form and that, as we have seen, is determined by a preceding system. A clock is a good example of this sort of independence without autonomy: it does only what its structure determines and it does that irrespective of the world around it, until it runs out of energy or its thermodynamic cycle is broken (e.g. by switching it off).

On the other hand an autonomous person can be extremely sensitive to their surroundings, constantly and precisely adjusting their behaviour to every small change like a ballerina balancing on point. This person is not independent in the narrow technical sense, but is exercising considerable cybernetic independence if their behavioural adjustments follow an internally generated scheme of responses (as she will tell you they do). Contrast that with the proverbial leaf floating on the water, for which the behaviour is entirely and directly determined by the wind and current (external forces): the leaf has no cybernetic independence at all.

But a leaf does not have to move macroscopically to assert its cybernetic independence. If it is alive, then it will open and close its stomata in response to light (and maybe moisture) in its environment and, to some extent, it will decide when different metabolic and catabolic processes occur in its cells. All these microscopic movements of cells and biomolecules are under the direction of an embodied algorithm, something more than embodied information. It is the result of information over and above form (i.e. what the clock lacks). This extra information provides alternative states (cybernetic freedom) through molecular switches based on shape-changing (conformational) patterns in its constituent proteins. Causal connection among these conformation-changing proteins produces exactly the effect we see in connections among switching transistors in a computer: it stores and implements (runs) an algorithm in which organised and rational responses are made internally to external stimuli. These stimuli are the result of detecting events in the external environment via transducers (molecular receptors).

Of course a living leaf is only autonomous to some extent because if it is attached to the rest of the plant, as it should be, then these processes of living are also under the control of other parts of the plant. The plant as a whole is united as a Kantian whole that has causal sovereignty over the leaf and can act independently of exogenous control, whilst being influenced by environmental changes, e.g. in light level. The crucial difference between independence and its opposite is found at the boundary of a system that has identity (so that it has a causal boundary). This difference is between cause and effect (the inevitability of outcome to any force) and signal and response - the optional outcome determined, at least in part, by internally generated control in response to an exogenous force. This crucial difference is only possible if the system has all of a) internal (self) control, b) a boundary which can strip the information content of forces from their magnitudes and c) transducers that can convey that information to the internal control system. These are joint attributes of all living systems [see explanation of homeostasis on the autopoiesis page].
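The distinction between cause and effect and signal and response can be caricatured in a few lines of code. In the sketch below, a floating leaf's movement is simply proportional to the force acting on it, while a living leaf passes the light level through a transducer that keeps only whether light is present, discarding its magnitude, and then lets an internal rule, which also depends on the leaf's own water status, decide whether the stomata open. The coupling constant, threshold, units and the single 'water status' variable are hypothetical simplifications, not a model of real stomatal physiology.

```python
DRAG = 0.5              # hypothetical coupling between water flow and leaf movement
LIGHT_THRESHOLD = 0.3   # hypothetical detection threshold (arbitrary units)


def floating_leaf(current_force: float) -> float:
    """Cause and effect: the movement is fixed entirely by the external force."""
    return DRAG * current_force


def living_leaf(light_level: float, water_status: str) -> str:
    """Signal and response: an internal scheme chooses the outcome."""
    # Transducer: keep only the information (light or dark), discard the magnitude.
    light_detected = light_level > LIGHT_THRESHOLD
    # Internal control: the same signal can lead to different responses
    # depending on the leaf's own state.
    if light_detected and water_status == "sufficient":
        return "open_stomata"
    return "close_stomata"


print(floating_leaf(2.0))                 # 1.0
print(living_leaf(0.9, "sufficient"))     # open_stomata
print(living_leaf(0.9, "stressed"))       # close_stomata
```

Doubling the force on the floating leaf doubles its movement, whereas any light level above the threshold produces the same signal in the living leaf, and what happens next is settled inside the boundary.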

Autonomy and freedom are therefore also linked, as the autonomous agent must be free to act as it determines, not be a slave to externally imposed cause and effect. The freedom is both freedom from exogenous control (the common language meaning) and the freedom provided by a breadth of options for self-control, i.e. the degrees of freedom available to the system. This is the number of available internal states of the system, given its current state. It is provided for by the molecular switches just mentioned and is less precisely referred to by philosophers of free will as 'leeway freedom'. The idea that this sort of freedom arises from molecular switching is strongly argued by Ellis and Kopel (2019), though more deeply, it is the pure information algorithm embodied at the system level (together with a separate formal embodiment, especially DNA for living systems) that determines the behaviour. This information exercises control by being the constraint on physical forces (at the atomic scale) among biomolecules (the inter-molecular electrostatic forces that give action to biochemistry: binding and cleaving molecules, attracting and repelling, as in hydrophobic/hydrophilic forces) [again, explained on the physical basis of causation].
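Degrees of freedom in this sense, the number of internal states available to a system given its current state, can be counted directly for any system described by a transition table. The toy tables below are hypothetical: a clock that has exactly one successor for every state, and a bacterium-like controller whose next state depends on what it senses. Neither is meant as a faithful model of a real clock or of bacterial chemotaxis.

```python
# Transition tables map (current_state, sensed_input) -> next_state.
Transitions = dict[tuple[str, str], str]

CLOCK: Transitions = {
    ("tick", "anything"): "tock",
    ("tock", "anything"): "tick",
}

BACTERIUM: Transitions = {
    ("idle", "sugar"): "swim",
    ("idle", "toxin"): "tumble",
    ("idle", "nothing"): "idle",
    ("swim", "sugar"): "swim",
    ("swim", "toxin"): "tumble",
    ("swim", "nothing"): "idle",
    ("tumble", "sugar"): "swim",
    ("tumble", "toxin"): "tumble",
    ("tumble", "nothing"): "tumble",
}


def available_states(transitions: Transitions, current: str) -> set[str]:
    """Distinct next states the system can enter from `current`."""
    return {nxt for (state, _), nxt in transitions.items() if state == current}


def leeway(transitions: Transitions, current: str) -> int:
    """A crude count of leeway: how many options the current state allows."""
    return len(available_states(transitions, current))


print(leeway(CLOCK, "tick"))        # 1
print(leeway(BACTERIUM, "idle"))    # 3
```

The clock always has a leeway of one, whereas the hypothetical bacterium has up to three options, and which one it takes depends on what it senses.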

Three Axes of Autonomy

All this led to the conclusion that there are in fact three empirically independent axes of continuous variation in autonomy, as illustrated with examples below (from Farnsworth 2023).

The three primary axes of autonomy and freedom, showing with examples that these are effectively continuous variables (not presence/absence) and that, although hierarchically dependent, they are empirically independent (orthogonal) variables. From Farnsworth, 2023.

Although these axes describe independent metrics of autonomy (each is a ratio of internal to external causation), the aspects of autonomy they measure are built hierarchically in life: cybernetic freedom depends on cybernetic independence and that in turn depends on constitutional independence. Non-living systems (e.g. technology) do not necessarily depend on that hierarchy.

Looking at the diagram, a star is self-made to a limited extent, but has no cybernetic control, so is placed at zero on the two cybernetic axes. Automata and computers are entirely dependent constitutively, but have some independence of cybernetic operation, and they range in degrees of freedom from very little (the automaton) to large (the universal computer). Organisms, being autopoietic systems, have significant constitutive independence and, being self-regulated, have very considerable cybernetic independence, but they vary in cybernetic freedom from rather little (the microbe) to the great flexibility of behaviour seen in, e.g., the octopus.

To understand constitutional independence better, we need to return to considering closure, especially of constraints and causality.

Closure of constraints

Leading proponents of the organisational theory of biology (Alvaro Moreno, Matteo Mossio and Leonardo Bich) explain autonomy as the consequence of a closure of constraints that leads to internalisation of causation, identity (their concept is close to the Kantian whole) and function (teleology or purpose). We are reminded that purpose can only be defined in terms of a Kantian whole because it is only for that sort of system that 'aims and goals' make any sense. These ideas are laid out in detail and summarised in Moreno and Mossio's book (2015).

I think the crux of their theory was the innovation presented in Montévil and Mossio (2015), where they showed that constraints are causal, to which I add that they are specifically formal cause, which is the informational part of efficient cause (remember the other part is physical force, as explained on the page on the physical basis of causation). Closure of constraints means that all the constraints of a system make one another by their constraining action. We only see this phenomenon in living systems.
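One simple way to check the idea that all the constraints of a system make one another (a simplified formalisation assumed here for illustration) is to record, for each constraint, which other constraints produce or maintain it. Closure then requires that every constraint is produced by at least one other constraint in the set and that every constraint helps to produce at least one other. The component names are hypothetical.

```python
def is_closure_of_constraints(produced_by: dict[str, set[str]]) -> bool:
    """True if every constraint is made by, and helps make, other constraints in the set.

    `produced_by[c]` lists whatever produces or maintains constraint c; entries
    that are not themselves keys are treated as external to the system.
    """
    constraints = set(produced_by)
    # Dependence: each constraint needs at least one *other* internal producer.
    for c, producers in produced_by.items():
        if not (producers & constraints) - {c}:
            return False
    # Enabling: each constraint contributes to producing at least one other.
    enablers = {
        p
        for c, producers in produced_by.items()
        for p in producers
        if p in constraints and p != c
    }
    return enablers == constraints


# Hypothetical, heavily simplified cell: each part is maintained by others.
cell = {
    "membrane": {"enzymes"},
    "enzymes": {"template", "membrane"},
    "template": {"enzymes"},
}
# Hypothetical artefact: its parts are made by an external factory.
machine = {"gear": {"factory"}, "spring": {"factory"}}

print(is_closure_of_constraints(cell))     # True
print(is_closure_of_constraints(machine))  # False
```

A constraint that only maintains itself, or one that depends entirely on something outside the set, breaks closure under this check.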

Most importantly these authors claim that biological function is the closure of constraints in a biological system. Function is a special kind of action (efficient cause) because it is a contribution towards the maintenance and action of the whole system. Their interpretation therefore amounts to saying that when every constraint contributes to the making and maintaining of every constraint in a constraint-closed system, the totality of constraints becomes a biological function.

In the organisational approach to biology, closure of constraints leads directly to identity, the possibility of function and the emergence of autonomy. As the page on closure explains, there are several kinds of closure, and the difference between cybernetic and ontological causal closure is important here. A system with cybernetic causal closure is self-governing in the sense that all the constraints embodied within it collectively create the set of states and transitions among those states, without exogenous control: the constraints act on one another to produce one another. This circular causation ensures cybernetic independence. It is possible to interpret the artificial neural networks (ANNs) in Albantakis (2021) as examples of systems with quantifiable degrees of cybernetic closure (which she measures in various ways). Larissa Albantakis uses autonomy to mean "whether a system’s actions are determined by its internal mechanisms, as opposed to external influences", which is roughly what I am calling cybernetic independence. Some of her ANNs also show cybernetic freedom because they are able to take more than one path through internal states, according to the environmental circumstances they encounter. She also quantifies the degree to which they achieve cybernetic (control) closure, so in all, her analysis, though preliminary, is an important step towards placing all this on a scientific footing.

Systems with ontological causal closure have constitutional as well as cybernetic independence. Their constraints are embodied in forms that are created by the collective of all member embodied forms. Such a system is necessarily a physically embodied system that makes and maintains itself (autopoiesis). For it, the closure of constraints is the closure of efficient causes: the parts that make up the whole literally bring one another into being. Only life is able to do that (so far). The ANNs referred to above are not autonomous in this ontological, physical sense because their existence wholly depends on the computational system used to instantiate them: they are strictly informational (cybernetic) entities. As such, they have no independent source of energy (needed for maintaining their organisation and performing their computational activity) and they have no physical agency (like brains in a tank, they can think what they like, but will never be able to do anything). In short, cybernetic closure may be sufficient for an autonomous 'mind', but not for an autonomous agent. For that there must be constitutive autonomy: the ability of the system to physically make and maintain itself and to interact with its physical environment in a way that is conducive to maintaining itself in the face of environmental change. The only way to do all that is to have an organisational structure that is closed to efficient causation. Rosen's (M,R)-system is an example of this, as are the more practically realisable systems of Hofmeyr (2007, 2021) and Gánti's chemoton (the illustrative system used in Moreno and Mossio's book).

Put very simply, ontological closure (closure to efficient causation) means that every part of the whole is the result of the actions of the whole and the whole itself is the result of the actions of every part. This leaves the system as a whole to be independent and free from exogenous support* (with the implication of freedom from exogenous control as well). That is why this kind of closure is the guarantee of autonomy.

* Though, of course, it is still dependent on exogenous resources such as an energy supply and materials from which to construct and maintain itself.
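The difference between depending on the environment for materials and depending on it for efficient causes can be sketched as follows. Each production step records what does the making (its efficient cause) and what it is made from (its material causes). The system counts as closed to efficient causation here if every efficient cause is itself produced within the system, while materials may be drawn from declared external resources, as the footnote allows. The toy 'cell' and 'robot' are hypothetical illustrations, not Hofmeyr's or Rosen's actual models.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Production:
    product: str
    efficient_cause: str              # what does the making, e.g. a catalyst
    material_causes: frozenset[str]   # what the product is made from


def closed_to_efficient_causation(processes: list[Production], resources: set[str]) -> bool:
    products = {p.product for p in processes}
    # Every maker must itself be made inside the system...
    makers_internal = all(p.efficient_cause in products for p in processes)
    # ...whereas materials may also come from the declared external resources.
    materials_available = all(
        m in products or m in resources
        for p in processes
        for m in p.material_causes
    )
    return makers_internal and materials_available


toy_cell = [
    Production("metabolites", "enzymes", frozenset({"nutrients"})),
    Production("enzymes", "ribosomes", frozenset({"metabolites"})),
    Production("ribosomes", "enzymes", frozenset({"metabolites"})),
]
toy_robot = [
    Production("chassis", "factory", frozenset({"steel"})),
    Production("controller", "factory", frozenset({"silicon"})),
]

print(closed_to_efficient_causation(toy_cell, {"nutrients"}))          # True
print(closed_to_efficient_causation(toy_robot, {"steel", "silicon"}))  # False
```

The toy robot fails not because it needs steel and silicon from outside, which the toy cell's reliance on nutrients shows is permitted, but because the factory that makes it is not part of it.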

Adaptation - cybernetic freedom

We haven't finished yet (recall the ballerina). Suppose there were a system with closure to efficient causation that took nutrients from its environment as raw materials and continuously made itself with them (as in the Hofmeyr, 2007 system). Then let the abundance of the nutrients change so that some were much more than needed and others much less. How would it cope? Would it remain autonomous, or would it fail to persist with its self-making function, become materially faulty and die? That is at least a serious risk for it, and the only possible response is one of adaptation, which really means having more than one set of internal states available: i.e. being flexible and adaptive in function. If it has that facility, then it could have a different way of functioning for each set of nutrient concentrations, or even a continuously variable way of adjusting its internal relationships to match the varying environment.

Many bacteria have this facility, for example having more than one metabolic process available and the ability to detect the chemical environment to which each process is best suited and to switch between them.
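The switching described above can be caricatured as a small decision rule: sense the chemical environment, then select whichever metabolic mode suits it. The sketch is loosely inspired by the well-known preference of E. coli for glucose over lactose, but the threshold, units and mode names are hypothetical and real regulation is far richer.

```python
def choose_metabolism(glucose: float, lactose: float, threshold: float = 0.1) -> str:
    """Select a metabolic mode from sensed concentrations (hypothetical units)."""
    if glucose >= threshold:
        return "glucose_metabolism"     # preferred substrate available
    if lactose >= threshold:
        return "lactose_metabolism"     # switch when glucose runs short
    return "starvation_response"        # neither substrate is adequate


# A changing environment: (glucose, lactose) at successive times.
environment = [(1.0, 1.0), (0.05, 0.8), (0.0, 0.0)]
modes = [choose_metabolism(glc, lac) for glc, lac in environment]
print(modes)
```

Without the second and third branches the system would have a single fixed way of functioning, which is exactly the lack of cybernetic freedom that leaves it vulnerable when nutrient abundances shift.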

You will recall the philosopher John Collier (2002) explaining autonomy and identity. In that paper, he further added that for autonomy, the cohesion-system (we will think of the system having closure of efficient causation) must also be able to adapt to its environmental conditions, for which it needs "a number of internal states dynamically accessible" (having in mind a range of configurations as responses to environmental conditions). This is of course the cybernetic freedom: the third axis on the graph I showed at the beginning of the article. It is the feature, more than any other, that separates living from non-living things, as the table below shows (but don't be misled by the quantitative precision of those figures; they are just illustrative estimates). Cybernetic freedom is strongly expressed by the mammalian immune system, a sort of cellular and biochemical computer that sorts friend from foe by recognising millions of chemical traits and motifs. It learns and rapidly adapts by constantly transforming almost every aspect of its structure and subjecting itself to intense selection (in a sense simulating evolution by natural selection). The immune system would also score highly on constitutional independence, only depending on the 'host' body to provide a suitable physical and chemical environment in which to thrive.

Biological Autonomy.

Figures in the table above are just illustrative estimates, but show a clear pattern in the distribution of different axes of autonomy, especially highlighting that living things have considerably more constitutional independence than non-living things (with the exception of the star, which, in a sense, is entirely self-made). Cybernetic freedom (leeway for actions) is limited in all but higher organisms, though we humans have extended our already very impressive cybernetic freedom with tools, one of which, the AI robot, is budding off as an autonomous system in its own right. All the systems listed have fairly high cybernetic independence, though AI robots may have less by design if they need to be strongly affected by their environment. Humans do not score 10 for cybernetic independence because as organisms we are still subject to environmental constraints. That goes for all but the star, which is (usually) so dominant in its local environment that nothing significantly affects it. Our constitutional independence does not score a perfect 10 either, because we have inherited so much from our parents, especially genes. I might even have downgraded that score to 8 in recognition of the large contribution of society to the formation of our minds (the famous behaviourist B.F. Skinner would have set it at no more than 5: that is an active debate).

Reason and purpose - teleology

Quantitative measures like those above leave a false impression, of course, because they cannot explain the qualitative gulf between living and non-living things. The qualitative difference between them is that we cannot explain living without reference to purpose. At the heart of all explanations of life (self-maintaining, self-propagating, adapting and learning) we ascribe a reason and role to every part or system composing life (every organ and every enzyme etc. has a purpose). This is termed teleology and is only tolerated in mainstream science if it can be dismissed as loose and familiar language*. In other words, we are not supposed to mean that, e.g., when a bacterium swims towards a sugar concentration it is 'because it aims to get the sugar'; 'its goal is more sugar', or worst of all, 'it wants the sugar'. We are supposed to really mean that evolution has shaped it in a way that makes it swim towards the sugar. That implies that it is nothing but a little machine that acts only according to its structure, like biochemical clockwork. In this there is no sense of purpose: Aristotle's final or ultimate cause is a figment of our imagination, so the official doctrine goes. Let us pause to consider what that says about human motivations.

* Teleology has become a focal point for those involved in the arcane debate about intelligent design: whether body parts, especially of animals, are structured to perform a purpose (and therefore designed with that purpose in mind) or are just 'lucky accidents' as the theory of natural selection implies. This is a different kind of teleology from the one I am referring to here, because no individual organism can 'evolve' a body part, but it can hypothetically have an intention to act in some particular way that is within its powers, for a purpose of its own. Science officially says it cannot have a purpose of its own, only a behavioural algorithm that, by natural selection, suits the circumstances.

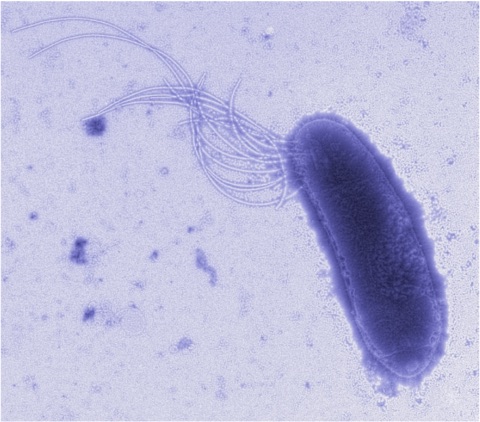

A bacterium (Roseburia species) - swimming towards what it wants ? Image from microbe safari (presumed Dr. Tim King, Rowett Institute, Scotland).

Robert Rosen wrote extensively and critically on the doctrine of science that rules ultimate cause out of order. In his books (Life Itself (1991) and Essays on Life Itself (2000)), he pointed to a deep flaw in scientific thinking that has led to it. A more practical (and superficial) reason for this taboo is that allowing something to have a goal or purpose of its own admits subjectivity (the reason for its behaviour is to do with its own experience), and subjectivity is officially not allowed in science. There is, however, a tradition of allowing it specifically for organisms, as some biologists feel justified in saying “objectivity is achieved through recognising this inherent subjectivity” (Bueno-Guerra, 2018), through the application of von Uexküll’s Umwelt concept: to understand organisms we must see the world as they see it. That is justifiable as a technique, a method of thought, but remains little more than intuition if taken literally.

Indeed, for a system to have a goal implies that a) it is a thing with an identity, without which it is not even meaningful to talk of it having a goal; and b) it can at least in principle be the source of causation, since otherwise any goal would be nothing but a futile wish. That is for two reasons. First, if a goal is to be more than a futile wish, it has to result in some action aimed at achieving it: there must be a physical consequence and for that, efficient causation is necessary. Secondly, if this efficient cause arose from anything other than the system possessing the goal, then it would not be the system's goal that motivates it.

Recalling that all efficient cause is physical force constrained by information, we can see that tracing the flow of causation back to its source must lead us to some information embodied by the goal-enabled system. That information at the origin of the causal chain must be the goal itself, embodied as a cybernetic instruction; a statement of the desired outcome.

In their paper "What makes biological organisation teleological?", Mossio and Bich (2017) identify circularity of causation, particularly constitutive autonomy, as the unique feature of life that produces purpose, goal and function. They identify the overall goal as self-preservation and existence: "Biological organisation determines itself in the sense that the effects of its activity contribute to establish and maintain its own conditions of existence: in slogan form, biological systems are what they do." (Mossio and Bich, 2017). This is more or less what I say with the 'slogan': life is computation, the output of which is itself. So, I think Mossio and Bich were right in so far as closure to efficient causation is necessary for teleology, but I don't believe it is sufficient. The only goal possible from their high-level abstraction is life itself, which is a fair point, but does not get us closer to understanding the way individual organisms originate their actions.

Putting it another way, living for its own sake is an overall goal that all living systems (organisms) share in common; it says nothing about the (different) lesser goals of individual organisms, even though those might ultimately be intended to contribute to the overall goal of life. For autonomy, we are looking for goals that are instantiated uniquely in each organism, goals that inform their individual behaviour from moment to moment. There is an obvious place to look.

For practical living, a system must incorporate goals that at least embody information about the ideal conditions and state needed for life to flourish. That of course is the collection of homeostatic set-points to be found in any living cell. With that simple addition of information, we can explain why the bacterium swims towards higher sugar concentration: it has a set-point for the signal transmitted from sugar detectors (chemical transducers on its surface) and a physical response to change the present signal to become more like the set-point.
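As a toy illustration only (real bacterial chemotaxis involves run-and-tumble swimming and sensory adaptation, which are not modelled here), the set-point idea can be sketched in Python. The linear gradient `sugar`, the integer step size and the set-point value are all hypothetical. The only information the 'bacterium' uses is its set-point and a comparison of its current sensor signal with the previous one; swimming is the physical response that moves the signal towards the set-point.

```python
def sugar(x: int) -> float:
    """Hypothetical sugar gradient: concentration rises linearly with position."""
    return float(x)


def swim(start: int, direction: int, set_point: float, max_steps: int = 100) -> int:
    """Swim along a 1-D gradient until the sensed signal reaches the set-point.

    direction is +1 or -1; the cell does not know which way the gradient runs,
    it only notices whether its signal got better or worse since the last step.
    """
    x = start
    previous = sugar(x)
    for _ in range(max_steps):
        signal = sugar(x)          # transducer: concentration -> internal signal
        if signal >= set_point:    # set-point reached: no further correction
            break
        if signal < previous:      # signal fell: reverse direction ("tumble")
            direction = -direction
        previous = signal
        x += direction             # actuator: keep swimming
    return x


print(swim(start=5, direction=-1, set_point=10.0))  # → 10
```

Starting out in the 'wrong' direction, the toy cell detects its falling signal, reverses, and stops where the signal matches its set-point.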

In my view, the simple homeostatic control loop - as part of a system closed to efficient causation - is the elemental foundation of all teleological behaviour because it is the cybernetic system which explicitly embodies information (the set-point) to serve as the constraint for physical forces exerted by components of the system, amounting to efficient cause originating from the system.

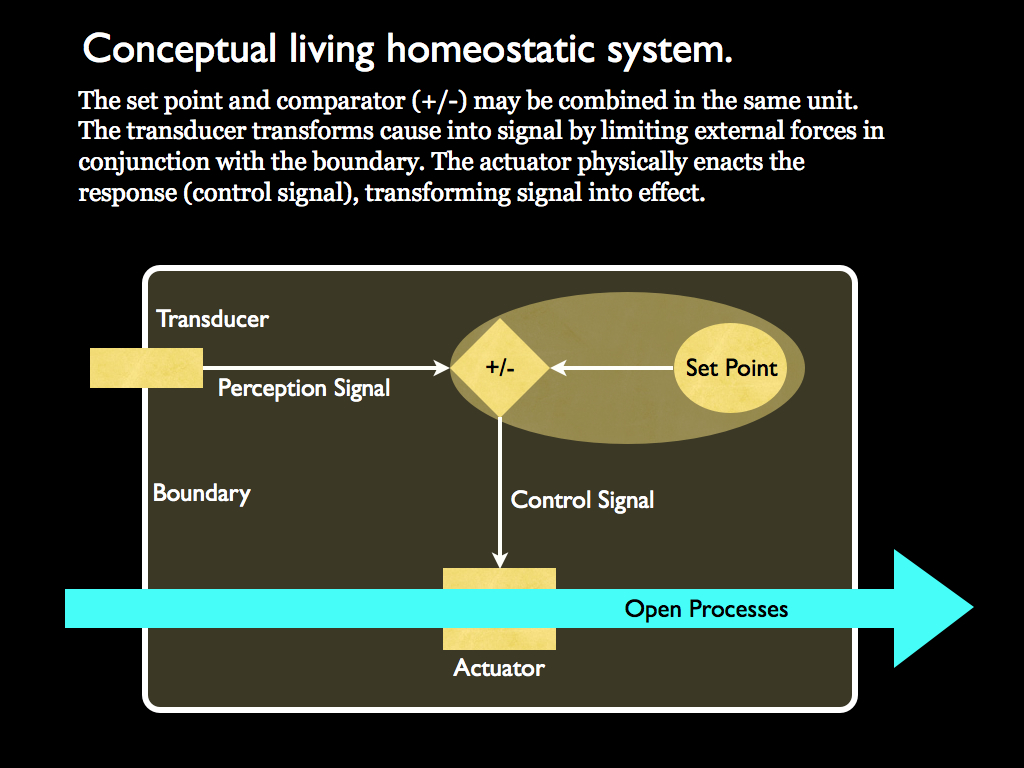

The essential components of a homeostatic system: the set-point and the signals are embodied formal information. Transducer and actuator translate between efficient cause and formal information. Formal information is that which constrains forces to give efficient cause.

Homeostasis is the essence of agency because it definitively arises from a system which has its own intention (embodied by the set-point) and a way to physically enact it through the actuator (e.g. the bacterium's flagella). It can also adjust its action in response to its changing environment using the signal it gets from its surface transducers. All this is made possible only because of the organisational (causal) context in which it is embodied. That context strictly must be one of closure to efficient causation because, as you can see, without that, there can be no differentiation between inside and outside of the system. Our system would have no identity, and without an identity the very ideas of transducer, set-point, perception signal and actuator make no sense; they cannot be defined without the context of identity. You might think, well, we have control loops just like this with all these components, for example the central heating system of a house, and we do not attribute agency and certainly not identity to them. This is all true, but these are not natural systems; they are tools, and all tools are extensions of the people that use them. It is crucially important that the homeostatic system is a part of, and embodied by, a system that is closed to efficient causation. Only natural, living systems meet both the closure to efficient causation and the homeostasis requirements.

If you are still with me, then so far we have a system that is closed to efficient causation, so has an identity and also embodies at least one set point for a homeostatic (self-control) loop giving it agency. The homeostasis guarantees non-zero cybernetic independence and the closure to efficient causation ensures non-zero constitutional independence. But what of cybernetic freedom, the third axis of autonomy? In a biological system, the set point has most likely been inherited from the parent (and it is specified in the engineering design of any artifact such as a robot). Some set-point values are so crucial to life in general and life so sensitive to the variable they specify, that this remains the case for all organisms in all circumstances (fiddling with these set-points is fatal). But there are plenty of others which might be context dependent and it would be better to have the facility to adjust them to suit circumstances.

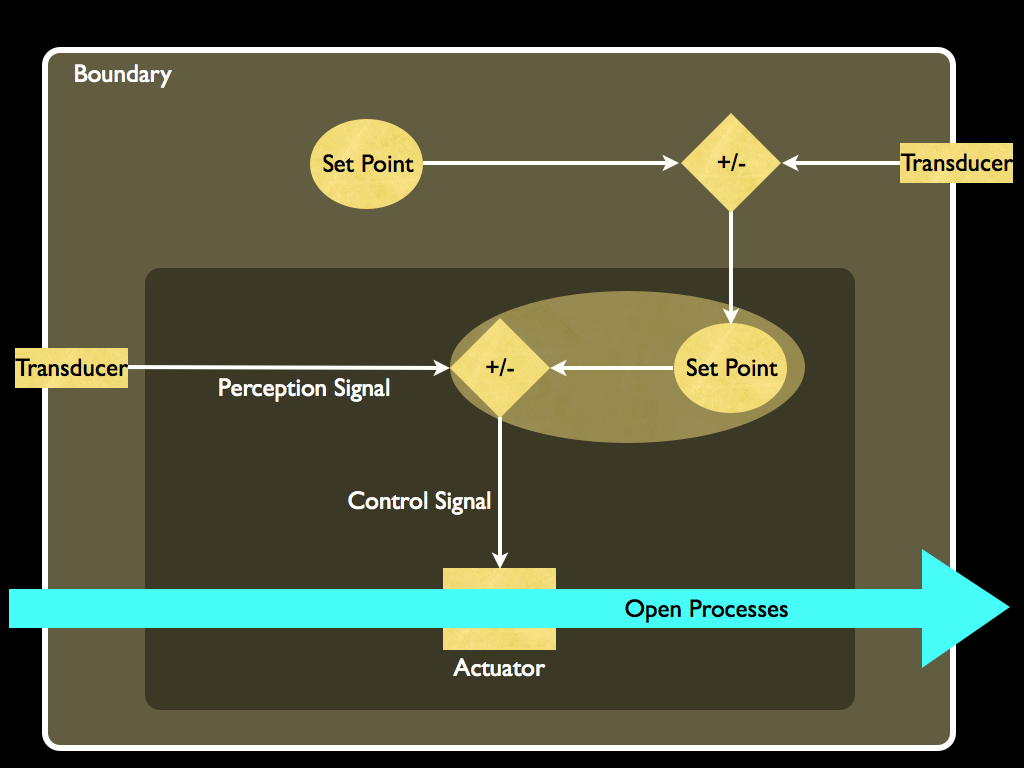

There is a very simple way of achieving that - just by nesting homeostatic control loops, as the diagram above illustrates.

Now you see that the inner set-point is determined from within the system, in fact by the control loop having another set point which is embodied information within the system and so causally originating with the agent (as we may now call this system). Not much imagination is needed to expand this to arbitrarily complicated nested sets of control loops, some of which may control each other to make for a highly flexible and responsive system of self control. This brings me to how the most basic form of autonomy found in cells, indeed in the last common ancestor of all current life, has expanded and elaborated to the point where some organisms (ourselves included!) appear to have executive control over their own behaviour, with strategic plans and policies (the hallmarks of what philosophers call 'free will').
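The nesting can be sketched with two coupled discrete-time loops. Everything here is a hypothetical illustration: an inner loop pulls a fast variable `y` towards an inner set-point by proportional correction, but a constant disturbance leaves it offset; a slower variable `z` follows `y`; and an outer loop, holding its own fixed set-point, adjusts the inner set-point by integral action until `z` reaches the outer goal. The gains and disturbance are arbitrary values chosen to keep the loops stable.

```python
from typing import Tuple


def run_nested(steps: int = 500, outer_set_point: float = 10.0,
               disturbance: float = 1.0) -> Tuple[float, float, float]:
    """Simulate an inner homeostatic loop whose set-point is set by an outer loop."""
    k_in, lag, k_out = 0.5, 0.2, 0.05      # illustrative gains (assumptions)
    y, z, inner_set_point = 0.0, 0.0, 0.0
    for _ in range(steps):
        # Inner loop: proportional correction of y towards the inner set-point,
        # plus a constant disturbance it cannot cancel on its own.
        y_next = y + k_in * (inner_set_point - y) + disturbance
        # z is a slower variable that follows y.
        z_next = z + lag * (y - z)
        # Outer loop: integral adjustment of the inner set-point, so that z
        # is driven to the outer (fixed, internally held) set-point.
        inner_set_point += k_out * (outer_set_point - z)
        y, z = y_next, z_next
    return y, z, inner_set_point


y, z, inner = run_nested()
print(round(y, 3), round(z, 3), round(inner, 3))  # → 10.0 10.0 8.0
```

The inner set-point settles at 8 rather than 10: it has been chosen from within the system, by the outer loop, to compensate for the disturbance acting on the inner loop.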

Levels of Autonomy: from homeostasis to free-will

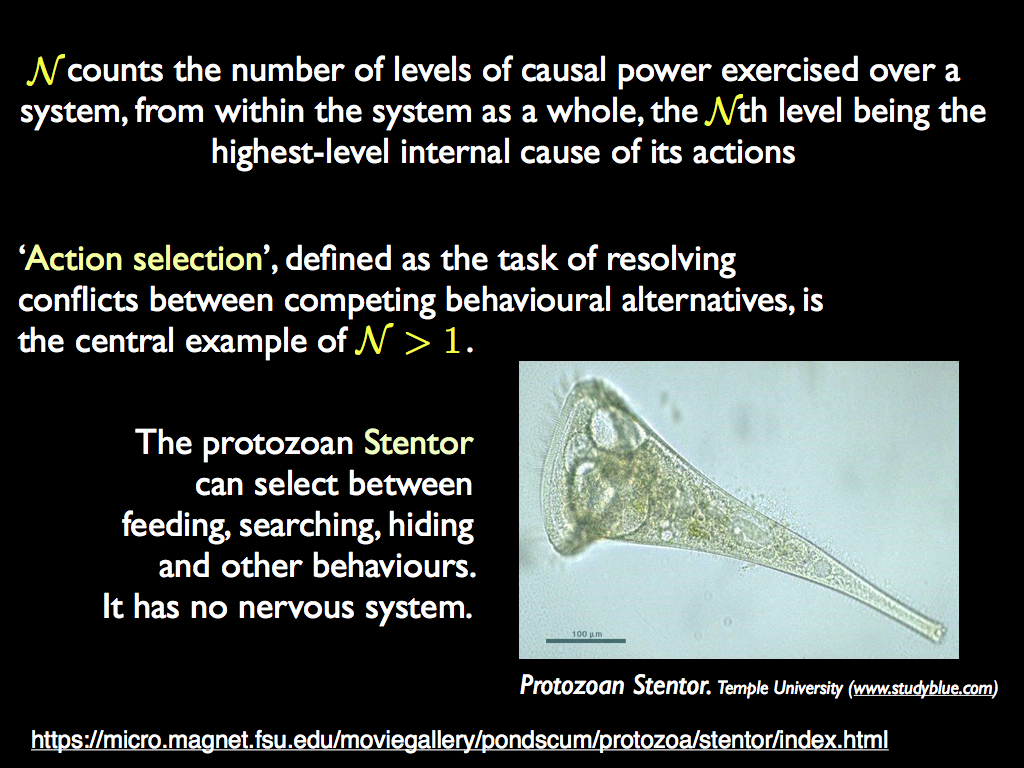

In Farnsworth (2018), I noted that two broad features are jointly necessary for autonomous agency (causal independence): organisational closure and the embodiment of an objective-function providing a 'goal': so far only organisms demonstrate both. Organisational closure has been studied (mostly in abstract), especially as cell autopoiesis and the cybernetic principles of autonomy, but the role of an internalised 'goal' and how it is instantiated by cell signaling and the functioning of nervous systems has received less attention in studies of autonomy. I aimed to add some biological 'flesh' to the cybernetic theory and trace the evolutionary development of step-changes in autonomy: 1) homeostasis of organisationally closed systems; 2) perception-action systems; 3) action-selection systems; 4) cognitive systems; 5) memory supporting a self-model able to anticipate and evaluate actions and consequences. Each stage is characterised by the number of nested goal-directed control-loops embodied by the organism, summarised by a quantity I termed will-nestedness N. Organism tegument (boundary), receptor/transducer system, mechanisms of cellular and whole-organism re-programming and organisational integration, all contribute to causal independence. I was led to the conclusion that organisms are cybernetic phenomena whose identity is created by the information structure of the highest level of causal closure (maximum N), which has increased through evolution, leading to increased causal independence and that this increase can, in principle, be quantified.
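A much-simplified reading of will-nestedness can be expressed in code, assuming each control loop is recorded together with the loop (if any) that sets its set-point, and taking N as the length of the longest such chain. This is an illustrative assumption, not the formal definition given in Farnsworth (2018); the loop names below are hypothetical.

```python
from typing import Dict, Optional


def will_nestedness(controlled_by: Dict[str, Optional[str]]) -> int:
    """Longest chain of loops, each following the loop that sets its set-point.

    controlled_by maps a loop name to the name of the loop controlling its
    set-point, or None if the set-point is fixed (e.g. inherited).
    """
    def depth(loop: str, seen: frozenset) -> int:
        parent = controlled_by[loop]
        if parent is None:
            return 1
        if parent in seen:
            raise ValueError(f"circular set-point control involving {parent!r}")
        return 1 + depth(parent, seen | {parent})

    return max((depth(loop, frozenset({loop})) for loop in controlled_by), default=0)


loops = {
    "flagellar_motor": "chemotaxis",   # motor set-point adjusted by the chemotaxis loop
    "chemotaxis": "nutrient_need",     # chemotaxis set-point adjusted by a need loop
    "nutrient_need": None,             # fixed (inherited) set-point
    "osmotic_balance": None,           # an independent single loop
}
print(will_nestedness(loops))  # → 3
```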

Previously, in Farnsworth (2017), I had provided the following definition of free will. Though it does not meet the approval of every modern philosopher, it at least has the virtue of being precise and independent of assumptions concerning the (real or imagined) attributes of healthy (and awake) adult humans. The definition applies to a general agent, which could be anything, natural or technological (hence the title of that work, "Can a Robot Have Free Will?").

An agent has 'free will' if all of the following are jointly true:

• FW1: there exists a definite entity to which free-will may (or may not) be attributed;

• FW2: there are viable alternative actions for the entity to select from;

• FW3: it is not constrained in the exercising of two or more of the alternatives;

• FW4: its “will” is generated by a non-random process internal to it;

• FW5: in similar circumstances, it may act otherwise according to a different internally generated “will”.

In this definition the term “will” means an intentional plan which is jointly determined by a “goal” and information about the state (including its history) of the agent (internal) and (usually, but not necessarily) its environment. The term “goal” here means a definite objective that is set and maintained internally to the agent. The objective is a fixed point in at least one variable. The set point of a homeostatic system is the most obvious example. An intentional plan is an algorithm that is embodied as (stored) information within the agent. The meaning of 'action' here is the creation of an additional (to those already present) constraint on one or more physical forces in the system that includes the agent and may extend arbitrarily beyond it, the practical result of which is a change to the trajectory of the development of the system in time. Examples include movement, changes or stasis (counter to change that otherwise would occur) in concentration of materials such as solutes, and the resultant effects such as maintenance of the agent against decay, changes in colour (think cuttlefish) or perception (e.g. eye pupil dilation), production of antibodies, alteration of physiology (e.g. root / shoot ratio in plants) and so on.

Having read up to this point, you will see that FW1 addresses the question of identity; FW2 incorporates cybernetic freedom; FW3 ensures cybernetic independence and FW4 identifies the source of internal control as embodied information: specifically a goal for at least one set-point. FW5 addresses a central question in the philosophy of free will - to act otherwise in similar circumstances ensures that the system is not merely an algorithm, but this is in fact extremely hard to establish and so far my efforts would not convince many 'traditional' philosophers.

At the time of writing it, the list of criteria (FW1-FW5) was chosen to address the main features that most philosophers have thought important (though they do not necessarily agree with one another about what is important and some philosophers would leave out some items of the list). FW2 and FW5 are intended to examine the effect of determinism and FW4 represents the “source arguments” for and against free will, whilst FW3 ensures freedom in the most obvious (superficial) sense. Only one of the list (FW1) is not usually included in any philosophical discussion of free will, perhaps because it is usually considered to be self-evident, but it plays an important role in this case because we are not assuming anything about the agent; in particular we are not assuming that it is a self-coherent whole (more precisely an organisationally closed system). This is important because, as the 2017 paper demonstrates, organisational closure is a necessary condition for free will as it is defined here.

The implication of agency is that a system to which it is attributed acts in a way that is systematic (not random) and such that the state (and maybe history of states) of the system (agent) is one of the determinants of the system’s behaviour. More specifically, given the current state, the next state of the system is not random, not wholly determined by exogenous control, nor intrinsic to its structure (as in clockwork), but is at least partly determined by its present and (optionally) one or more of its previous states. The proximate cause of an action taken by an agent with agency is identified as its ‘will’. This proximate cause is not merely mechanism; it is the result of information with causal power rather than just deterministic effective cause (see discussion of causes).

A theory of self-control

This idea of will is a development of my own theory of self-control, briefly stated as follows.

1. Self-control is the essential and elemental property of reactive autonomy. Reactive autonomy is the capacity to appropriately respond to environmental change by making internal changes that enable the performance of overall function to continue near optimally, i.e. it is functional action that is not mere isolated action. Isolated action is the performance of a function independently of external control: for example the action of an engine. Independence refers to control, not to the supply of resources such as energy and materials. Self-control means the internal adjustments needed to express reactive autonomy are enacted by internal processes independently of external control.

2. The most elementary form of self-control is homeostasis. In engineering control theory, this can be achieved using any one or a combination of feed-back and feed-forward systems, which may feature both positive and negative internal control signals. The most basic form of these is the negative feed-back control system, which may use any one or a combination of proportional, integral or derivative control (together known as PID control).

3. In engineering design of controllers of all kinds, an essential feature is the set-point, or reference signal that determines the ‘goal’ of the control system: a point (member) in the set of possible values of the controlled variable. In engineering, this set-point is specified as part of the control system design, but it may be (variably) specified by a higher order control system, in which case it is the controlled variable of that higher order system (hierarchies of control systems in which higher levels control lower via their set-points are termed cascades). The set point is in general a specific item of information: specifying the particular from a range of possibilities.

4. In natural (living) biochemical control systems, homeostasis can be quantified by the properties of a ‘chair’ function, plotted with the controlled variable on the y-axis and the environmental variable (or manipulated variable) on the x-axis, so that y remains close to constant (the value of the set-point) over a range of x. Systems which produce this phenomenon can be described by differential equations of reaction kinetics, whose solutions give properties such as the points of ‘escape from homeostasis’ (the leg and back of the chair shape) and the ‘homeostatic plateau’ (the seat of the chair).

5. In a parallel development, control system templates have been identified and analysed using linked differential equation models and used to interpret observed control structures in cellular biochemistry. The emphasis of these studies has been to characterise the dynamic behaviour of the control systems. An important result of this work is that the set-points are typically to be found in the precise values of reaction rates (note the role of substrate inhibition also), which in turn are the consequence of specific molecular forms, particularly the forms of enzymes catalysing the reactions. From this we can deduce that the specific information that is the set-point is embodied in the form of molecules, especially enzymes, which is in turn encoded by DNA / RNA, possibly with support from chaperone and other ancillary molecules.

6. Proactive autonomy can only be enacted by anticipatory systems which have the capacity for reactive autonomy and also have internalised a model of the self and its environment. The difference between the ‘clockwork’ automaton (e.g. bacterial chemotaxis) and free agency (showing initiative, such as a bird in flight) is the difference between reactive and proactive autonomy. It is in principle possible for the model of the self to be implemented in any ‘hardware’, including biochemical reaction networks. In practice, it is most likely to be found implemented by neural networks (both in life and in the artificial domain). Possibly (untested and unproven), the system implementing the model must be as flexible as a universal Turing machine, though this is not a particularly onerous condition in practice.

7. Initiative (the ability to change or initiate a behaviour independent of external stimulus or ‘pre-programmed control’) is the main emergent property of proactive autonomy. We might call it behavioural autonomy, or better still, anticipatory behavioural autonomy (ABA) to emphasise the rational intention of the initiative (anticipation is taken to imply a rational basis for choice).
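Points 2 to 4 above can be tied together in a small simulation. It assumes, for illustration only, a controlled variable `y` supplied by an environmental load `x`, lost by first-order decay, and removed by a compensating flux `u`. The flux is set by integral negative feedback against a set-point and is clamped to the actuator's range `[0, u_max]`. Sweeping the load traces the 'chair': `y` follows the load on the lower leg (controller switched off), sits at the set-point on the plateau, and rises again once the actuator saturates. With the default values the escape points are at x = decay × set-point = 1 and x = decay × set-point + u_max = 4.

```python
def steady_state(load: float, set_point: float = 2.0, decay: float = 0.5,
                 u_max: float = 3.0, gain: float = 1.0,
                 dt: float = 0.01, steps: int = 20000) -> float:
    """Long-run value of y for a given environmental load (illustrative model).

    Assumed dynamics, integrated with Euler steps:
        dy/dt = load - u - decay * y
        du/dt = gain * (y - set_point),  with u clamped to [0, u_max]
    """
    y, u = 0.0, 0.0
    for _ in range(steps):
        # Integral control: u accumulates the error, limited by the actuator.
        u = min(u_max, max(0.0, u + dt * gain * (y - set_point)))
        y += dt * (load - u - decay * y)
    return y


print([round(steady_state(x), 3) for x in (0.5, 2.5, 6.0)])  # → [1.0, 2.0, 6.0]
```

Within the plateau the integral term removes any steady-state error, so `y` returns exactly to the set-point whatever the load; outside it, the clamped actuator can no longer compensate and homeostasis is lost.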

How Autonomy is built up (in organisms)

Starting from nothing, how can any system build itself up to a level of autonomy that creates anticipatory behavioural autonomy, leading to the phenomena of free-will? The answer can only be by a process of boot-strapping in which an organisationally closed system acquires information from its environment and embodies it either structurally (as part of the system of constraints which organises its causal repertoire), or as a memory (embodied information serving as formal cause, e.g. DNA, or neurally encoded memories).

Boot-strapping appears to be the creation of new control and structure from nothing (like magic) but in fact every known example is one of selecting and incorporating (literally as in embodying) information from the environment. It has not yet been proved, but I strongly suspect that this is logically necessary and further that a system closed to efficient causation can only exist if it has incorporated information selected from the environment, over and above that which constitutes its structure.

Rather a lot of internalised information is necessary for the creation of a dynamic model of the self, and for it to be dynamic (i.e. responding to change), the model needs to be sufficiently flexible to represent all likely states in anticipation of their occurrence. That is the practical reason a universal Turing machine is likely to be needed, though there is a deeper theoretical reason too. For certain, the (self-) control system needed for proactive autonomy must be structured as a nested set of control loops (and this is what has been identified in the neural circuitry of organisms including arthropods and, of course, vertebrates). This nested structure matches the will-nestedness concept used to explain action selection.

In Farnsworth (2018) I concluded that organism identity is created by the information structure existing at the highest level of causal closure at which the highest level of will-nestedness is identified and that this coincides with the ‘maximally irreducible cause-effect structure’ defined in IIT (Integrated Information Theory; Oizumi et al., 2014).

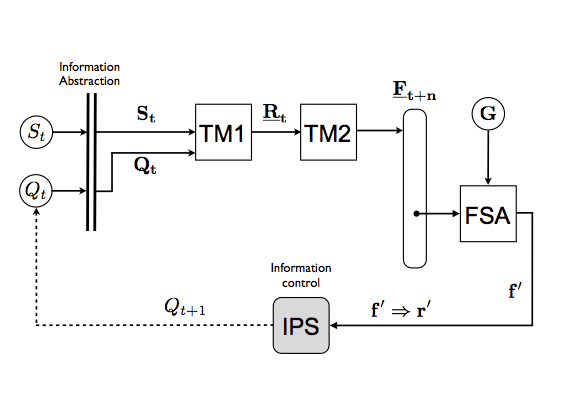

The "free will machine" (above diagram from Farnsworth 2017) is a kind of cybernetic structure intended to illustrate the requirements for free will as some philosophers define it. The term 'machine' follows the convention in cybernetics of calling information-processing devices machines. This one generates predictions of its own state in alternative futures $F_{t+n}$ (calculated by Turing Machine TM2), having built an internal representation of itself interacting with its environment (this representation is created by TM1). It selects the optimal response from among the possible responses at time $t$ ($R_t$), using a goal-based criterion $G$, within the Finite State Automaton (FSA), in which the goal is internally determined. IPS is the implementation of the selection in the physical system: a translation of information (the optimal future $f'$) into optimal action $r'$, which changes the system's state from $Q_t$ to $Q_{t+1}$.
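The selection step of the free will machine can be sketched as model-based choice. This is a loose illustration under assumed names, not the construction in Farnsworth (2017): `model` stands in for the internal representation built by TM1, repeatedly applying it to forecast a future stands in for TM2, `goal` plays the part of the internally held criterion G, and returning the best response corresponds to the selection of r'. The numbers in the usage example are hypothetical.

```python
from typing import Callable, Sequence

State = float
Response = float


def choose_response(state: State,
                    responses: Sequence[Response],
                    model: Callable[[State, Response], State],
                    goal: Callable[[State], float],
                    horizon: int = 3) -> Response:
    """Return the response whose forecast future scores highest against the goal."""

    def forecast(response: Response) -> State:
        # Roll the internal model forward, as if the response were held for
        # `horizon` steps (one alternative future).
        s = state
        for _ in range(horizon):
            s = model(s, response)
        return s

    return max(responses, key=lambda r: goal(forecast(r)))


# Hypothetical example: a variable at 35 with an internally held goal of 37.
best = choose_response(
    state=35.0,
    responses=(0.0, 1.0, 2.0),
    model=lambda s, r: s + r,          # assumed self/environment model
    goal=lambda s: -abs(s - 37.0),     # closer to 37 scores higher
    horizon=2,
)
print(best)  # → 1.0
```

The strongest response is not chosen: the forecast shows that it overshoots the goal, which is the kind of anticipation a fixed stimulus-response mapping cannot provide.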

First application - a theory of pain.

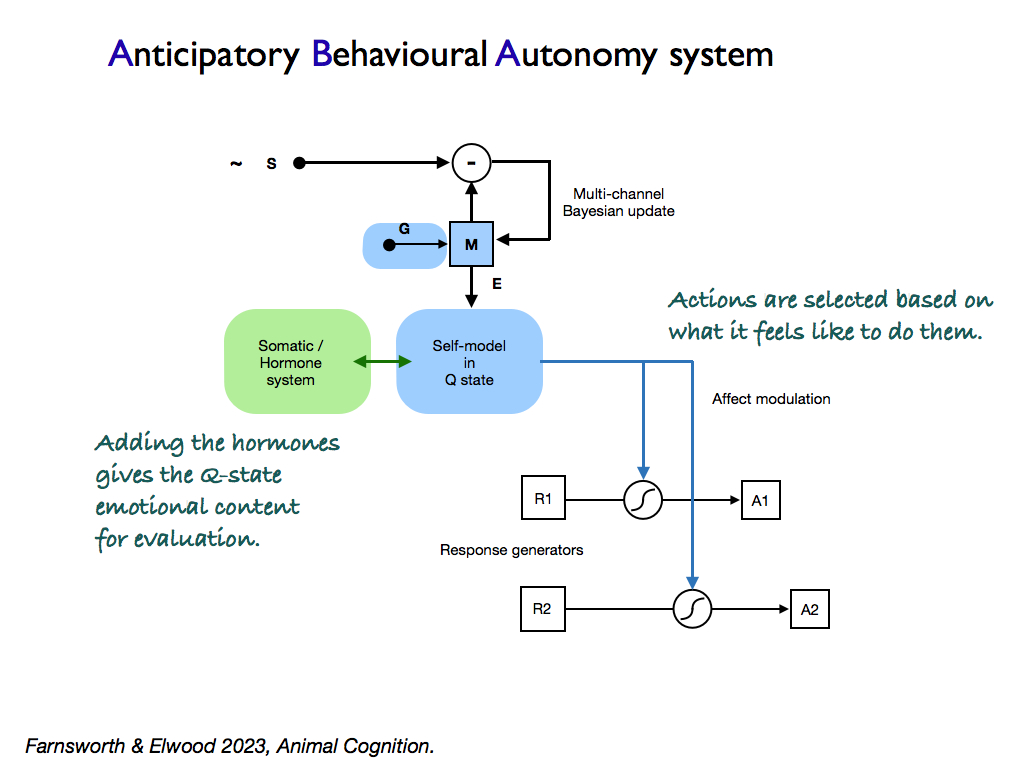

Early in 2023, Keith Farnsworth and Bob Elwood (Emeritus Professor and expert on pain in animals) made the first application of this model in developing a new theory of pain and suffering. The idea was that pain is not a signal to indicate actual or potential damage to the body, but rather an imperative (command) to attend to the source of perceived damage. In the paper (Farnsworth and Elwood, 2023), it was argued that such an imperative is only useful (biologically adaptive) for an organism that has a free choice of what to do. So the search was on to identify what kinds of organisms have this sort of free choice, since only they would be likely to feel pain (as opposed to mere nociception). The search resulted in separating organisms whose self-control is by an algorithm (or mechanism) that can be adequately represented as a Finite State Automaton (FSA) from those whose self-control cannot. The latter have the ability to predict future states of the organism and appraise them in terms of a common currency that represents the overall hedonic value of each. Such an organism is able to select the best option and incorporates this generalised appraisal into its internal control system, along with all the incoming signals from receptors and memories. This system was termed an Anticipative Behavioural Autonomy (ABA) system. Analysis of empirical findings showed that it is probably possessed by many organisms other than vertebrates and certainly possessed by all vertebrates.
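The contrast between the two kinds of control can be caricatured in a few lines of Python. Everything here is hypothetical: the stimulus table, the option names, the predicted consequences and the weights are invented for illustration, and treating pain as a negative weight on predicted damage is a simplification, not the theory of Farnsworth and Elwood (2023). The FSA maps each stimulus to a fixed action; the ABA-style chooser appraises each option's predicted consequences in one common currency and picks the best.

```python
from typing import Dict

# FSA-style control: each stimulus maps to one fixed action.
FSA_TABLE = {"noxious": "withdraw", "food": "approach"}


def fsa_act(stimulus: str) -> str:
    return FSA_TABLE[stimulus]


# ABA-style control: predicted consequences of each option are scored in a
# single common currency (a weighted sum), and the highest-valued option wins.
def aba_act(options: Dict[str, Dict[str, float]], weights: Dict[str, float]) -> str:
    def value(consequences: Dict[str, float]) -> float:
        return sum(weights[name] * amount for name, amount in consequences.items())

    return max(options, key=lambda option: value(options[option]))


# Hypothetical trade-off: keep feeding despite predicted damage, or leave.
EXAMPLE_OPTIONS = {
    "stay_and_feed": {"food": 5.0, "damage": 3.0},
    "leave": {"food": 0.0, "damage": 0.0},
}
print(aba_act(EXAMPLE_OPTIONS, {"food": 1.0, "damage": -2.0}))  # → leave
print(aba_act(EXAMPLE_OPTIONS, {"food": 1.0, "damage": -1.0}))  # → stay_and_feed
```

Changing how heavily predicted damage is weighted changes the choice, which only matters because there is a choice to be made; the FSA has no such trade-off to weigh.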

Philosophers' skepticism

None of what has been said so far would be recognisable to mainstream philosophers, who debate autonomy already assuming that the subject of examination is the human being, and more particularly the human mind. They assume the subject of their inquiry has the ability to make decisions and act in various ways according to its will; that is the starting point of many of their arguments on free will. One who takes an intermediate position between the general notion of autonomy that we are most interested in here and what I shall call the classical idea of human autonomy is the philosopher Daniel Dennett. One way, he suggests, of telling that something has more than zero autonomy is to test whether his intentional stance is useful in explaining its behaviour: that is, whether its behaviour is better explained by considering what it would do if it were a rational agent with a goal and what Dennett called 'beliefs' about its situation. Beliefs might be generalised to 'having a model of the environment', which may include a model of itself (the internal environment). We already know that if belief is replaced by the more technical term model (information representing another system, or part of a system, that is embodied in the host system), then this could apply to a large category of life-forms. The internal model of self is what Turing machines 1 and 2 in the diagram above are responsible for. Notice again that this notion presupposes that a coherent identity exists to have an embodied model, and also that it only concerns the issue of motivation, i.e. the goal of an agent, which is a matter of cybernetic control and, to some extent, the adaptation/fixation of goals. It does not address the auto/hetero-manufacture (constitution) question, or the fundamental matter of identity. To put it bluntly, I think classical philosophical approaches to autonomy are unduly constrained in outlook and miss crucially important aspects of its necessary conditions. 
Most strikingly, they pre-suppose that the agent of autonomy is the human mind and thereby commit the homunculus fallacy [see my blog post on that].

References

Albantakis, L. (2021). Quantifying the Autonomy of Structurally Diverse Automata: A Comparison of Candidate Measures. Entropy, 23, 1415. https://doi.org/10.3390/e23111415

Boden, M. (2008). Autonomy: What is it? Biosystems, 91, 305-308.

Bueno-Guerra, N. (2018). How to apply the concept of umwelt in the evolutionary study of cognition. Frontiers in Psychology 9, doi: 10.3389/fpsyg.2018.02001.

Collier, J. (2002). What is autonomy? International Journal of Computing Anticipatory Systems: CASY 2001 Fifth International Conference. Vol. 20.

Bertschinger, N.; Olbrich, E.; Ay, N.; Jost, J. (2006). Information and closure in systems theory. German Workshop on Artificial Life 7, Jena, July 26-28, 2006: Explorations in the complexity of possible life, 9-19.

Bertschinger, N.; Olbrich, E.; Ay, N.; Jost, J. (2008). Autonomy: An information theoretic perspective. BioSystems, 91, 331-345.

Effingham, N. (2013). An Introduction to Ontology. Polity Press: Cambridge, UK.

Ellis, G. F. R. and Kopel, J. (2019). The Dynamical Emergence of Biology From Physics: Branching Causation via Biomolecules. Front. Physiol. 9:1966. doi: 10.3389/fphys.2018.01966.

Ginsborg, H. (2006). Kant’s biological teleology and its philosophical significance. In: Bird, G. (ed.), A Companion to Kant. Blackwell, pp. 455-470.

Farnsworth, K.D. (2017). Can a Robot Have Free Will? Entropy. 19, 237; doi:10.3390/e19050237

Farnsworth, K.D.; Albantakis, L.; Caruso, T. (2017). Unifying concepts of biological function from molecules to ecosystems. Oikos, doi: 10.1111/oik.04171.

Farnsworth, K.D. (2018). How organisms gained causal independence and how to quantify it. Biology.

Farnsworth, K.D. (2023). How biological codes break causal chains to enable autonomy for organisms. Biosystems. 232. 105013.

Farnsworth, K.D. and Elwood, R.W. (2023). Why it hurts: with freedom comes the biological need for pain. Animal Cognition. 26. 1259-1275.

Griffith, S., Goldwater, D., Jacobson, J.M. (2005). Self-replication from random parts. Nature. 437. p636. doi:10.1038/437636a

Guyer, P. (1998). Design and autonomy. Kant, Immanuel (1724-1804). Routledge Encyclopedia of Philosophy, Taylor Francis. doi:10.4324/9780415249126-DB047-1

Hofmeyr, J.-H.S. (2007). The biochemical factory that autonomously fabricates itself: a systems biological view of the living cell. In: Systems Biology: Philosophical Foundations. Elsevier, Amsterdam, pp. 217-242.

Hofmeyr, J.-H.S. (2021). A biochemically-realisable relational model of the self-manufacturing cell. Biosystems, 207, 104463. doi:10.1016/j.biosystems.2021.104463

Montévil, M. and Mossio, M. (2015). Biological organisation as closure of constraints. J. Theor. Biol., 372, 179-191.

Moreno, A.; Mossio, M. (2015). Biological Autonomy: A Philosophical and Theoretical Enquiry. In History, Philosophy and Theory of the Life Sciences; Springer: Dordrecht, The Netherlands. Volume 12.

Mossio, M. and Bich, L. (2017). What makes biological organisation teleological? Synthese. 194: 1089-1114.

Oizumi, M.; Albantakis, L.; Tononi, G. (2014). From the Phenomenology to the Mechanisms of Consciousness: Integrated Information Theory 3.0. PLoS Comput. Biol. 10, e1003588.

Kauffman, S. A. and Clayton, P. (2006). On emergence, agency and organisation. Biology and Philosophy, 21, 501-521.

Rosen, R. (1991). Life itself: A comprehensive inquiry into the nature, origin and fabrication of life; Columbia University Press: New York, USA.

Rosen, R. (2000). Essays on Life Itself (posthumous). Columbia University Press: New York, USA.

Sharov, A.A. (2010). Functional Information: Towards Synthesis of Biosemiotics and Cybernetics. Entropy, 12, 1050–1070.